System Design ByteByteGo Videos.

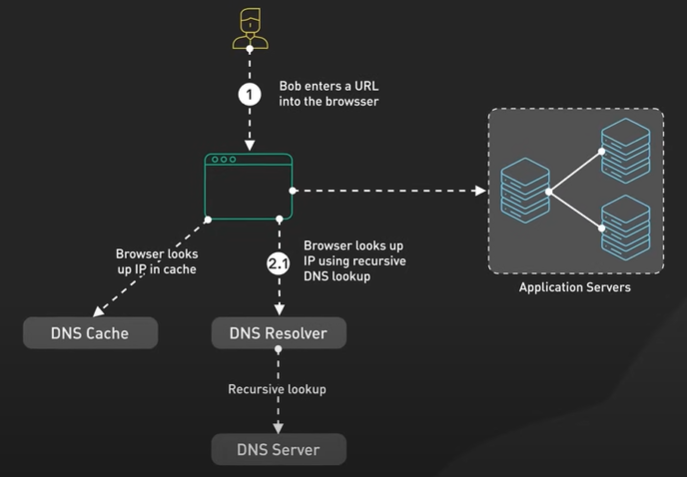

What happens when you type a URL into a browser?

https://youtu.be/AlkDbnbv7dk?si=ng-q1p3bEIXeurCM

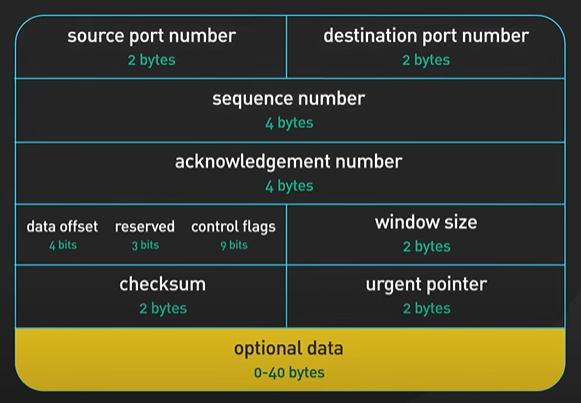

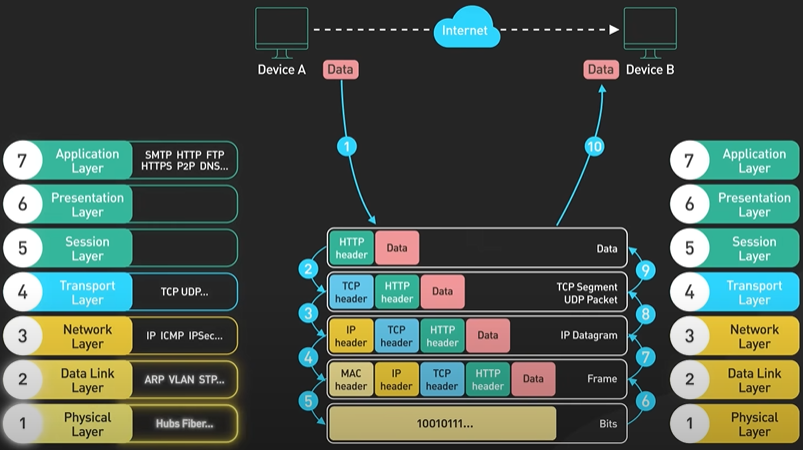

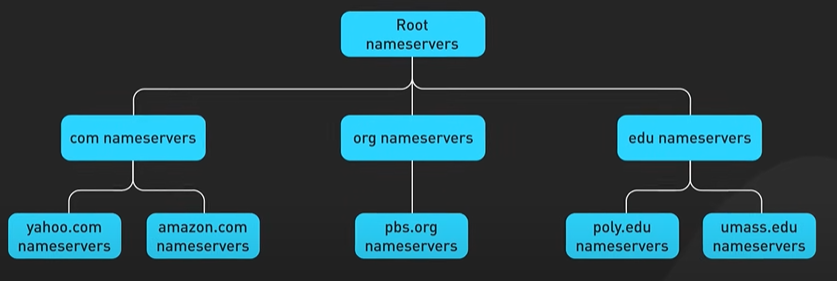

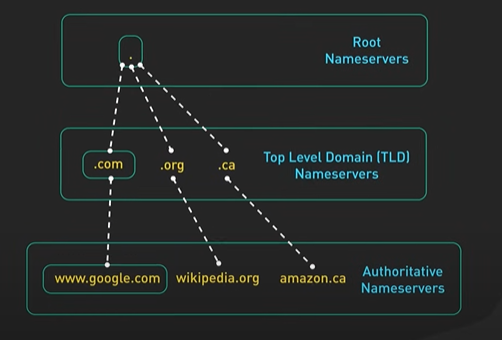

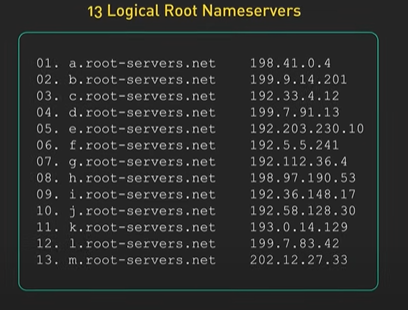

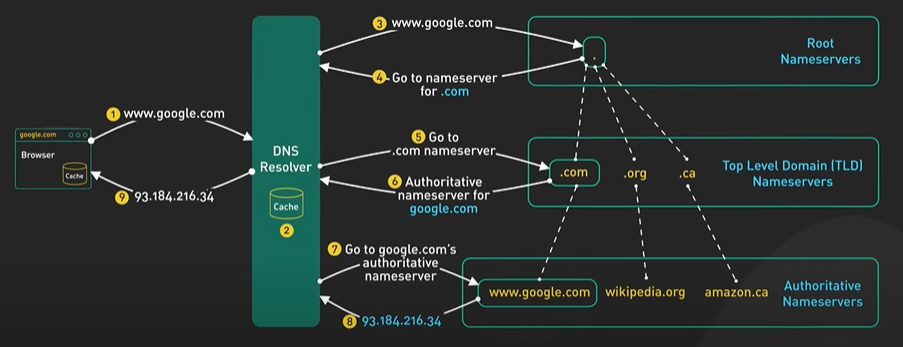

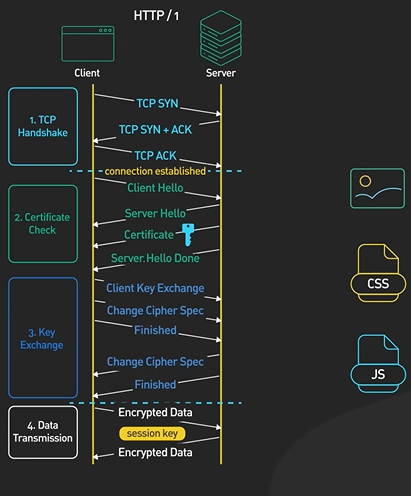

Hi, welcome to the first episode of ByteByteGo system design video series. In these videos, we Here in this example, Bob enters a URL into the browser and hits enter. What happens next? Well, let’s first discuss what a URL is. URL stands for universal resource locator, and it has four parts. First is scheme. In this example, it is http://. This tells the browser to connect to the server using a protocol called HTTP. Another common scheme is HTTPS. With HTTPS, the connection is encrypted. The second part of the URL is a domain. In this example, example.com. It is the domain name of the site. The third part of the URL is path, and the fourth is resource. The difference between these two is often not very clear. Just think of them as directory and file in a regular file system. They together specify the resource on the server we want to load. So okay, Bob entered the url into the browser. What happened next. Well, the browser needs to know how to reach the server, in this case example.com. This is done with a process called DNS lookup. DNS stands for domain name system. Think of it as a phone book of the internet. DNS translates domain names to IP addresses so browsers can load resources. It is an interesting service in and off itself that deserves a dedicated video that we should make later. Now to make the lookup process fast, the DNS information is heavily cached. First the browser itself caches it for a short period of time. And if it is not in the browser cache the browser asks the operating system for it. The operating system itself has a cache for it… which also keeps the answer for a short period of time. Now if the operating operating system doesn’t have it, it makes a query out to the internet to a DNS resolver. This sets off a chain of requests until the IP address is resolved. This is an elaborate and elegant process. Just to keep in mind that this process involves many servers in the DNS infrastructure and the answer is cached every step of the way. Again we’ll discuss this in detail in another video. Now finally the browser has the IP address of the server. In our case, again, example.com. Next, the browser establishes a TCP connection with the server using the IP address it got for it. Now there’s a handshake involved in establishing a TCP connection. It takes several network round trips for this to complete. To keep the loading process fast, modern browsers use something called a keep- alive connection to try to reuse an established TCP connection to the server as much as possible. One thing to note is that if the protocol is HTTPS, the process of establishing a new connection is even more involved. It requires a complicated process called SSL/TLS handshake to establish the encrypted connection between the browser and the server. This handshake is expensive and the browsers use tricks like SSL session resumption to try to lower the cost. Finally, the browser sends an HTTP request to the server over the established TCP connection. HTTP itself is a very simple protocol. The server processes the request and sends back a response. The browser receives the response and renders html content. Oftentimes there are additional resources to load, like javascript bundles and images. The browser repeats the process above that we mentioned - making DNS lookup, establishing TCP connection, making HTTP requests - to finish fetching all the other resources.

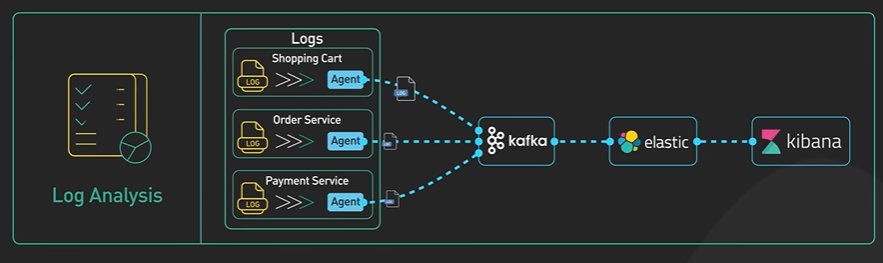

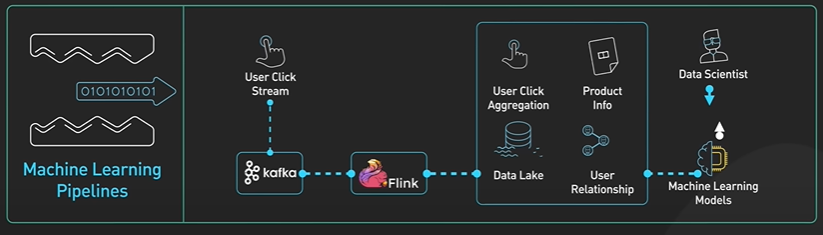

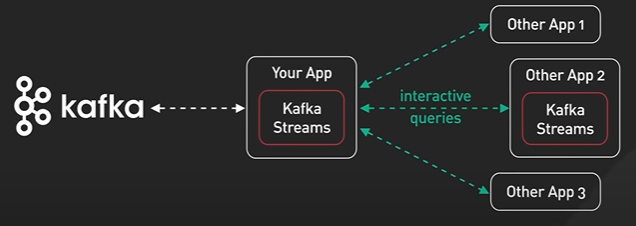

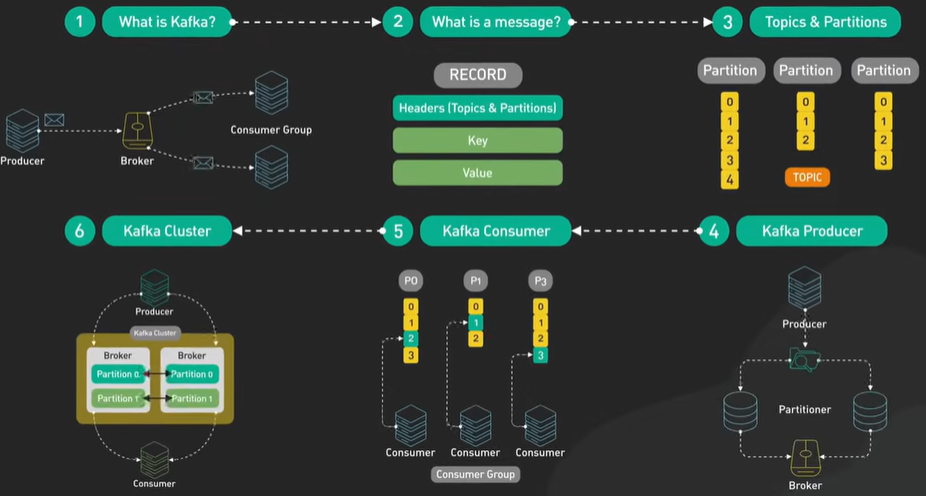

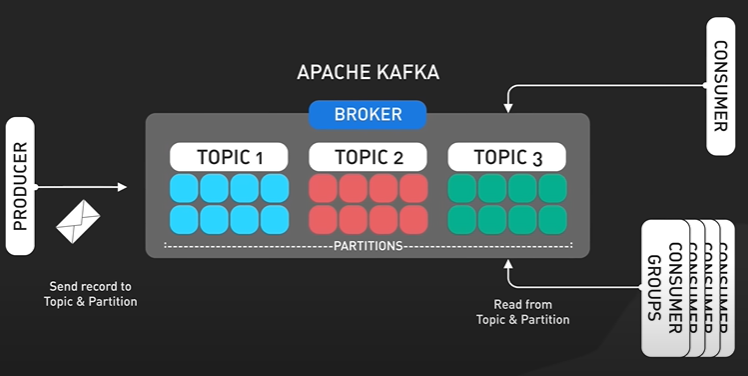

Why Kafka is fast?

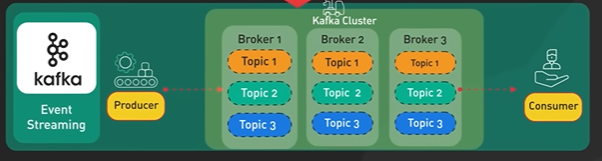

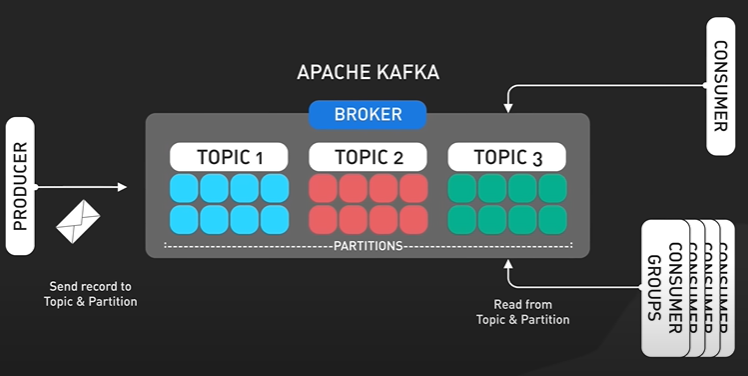

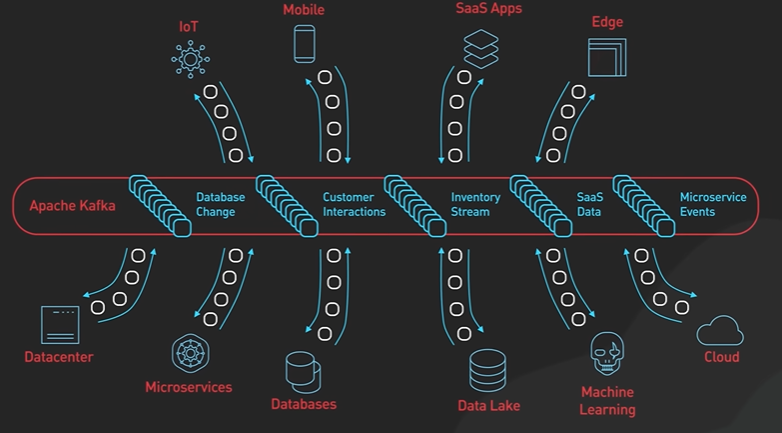

Kafka is designed for high throughput and it is designed to process large amount of message in a short amount of time.

Design decisions helps Kafka to move large amount of data quickly.

Kafka follows Sequential IO - There is a misconception that disk operation is slow compared to memory access.

It depends on the data access pattern. There are 2 types of disk access patterns - random and sequential.

In hard drives it takes time to physically move the arm to different location on the magnetic disk. It makes the random access slow.

In sequential it gets the data one after the other and it fast.

Kafka takes the advantage of sequential access and using the append only log.

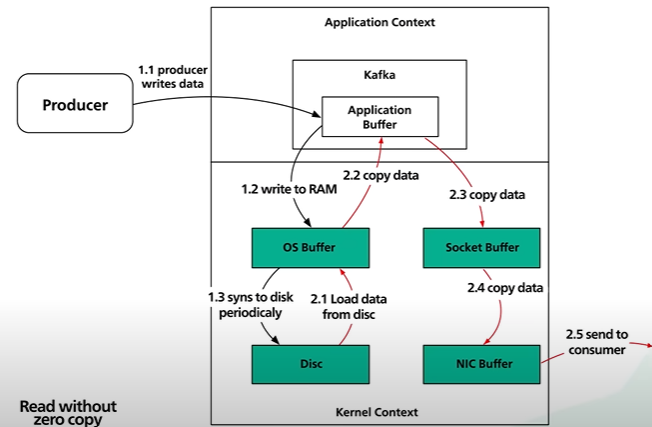

Kafka move data from network to disk and it removes copy. It follows the zero copy principle.

Kafka send data to the consumer - Data is loaded from disk to the OS cache. Data copied from OS cache into Kafka application. Data copied from Kafka to the socket buffer and from socket buffer to the Network Interface Card NIC buffer. The data is send over the network to the consumer.

There are total of 4 copy of data and 2 system calls. Not efficient.

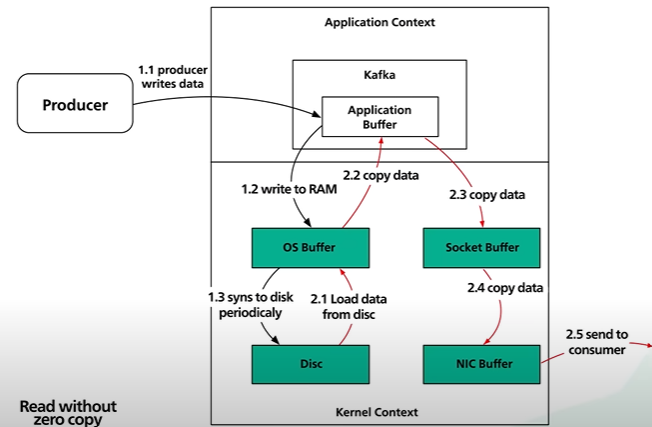

Kafka with zero copy.

The data page id loaded from the disk to the OS cache. With zero copy Kafka uses the system call called sendfile() to tell the OS to copy the data from the OS cache to the NIC buffer.

In the process the only copy is from the OS cache to the NIC buffer.

In the modern network card it is done by DMA - Direct Memory Access. DMA used and CPU is not involved and the process is efficient.

Sequential IO and DMA and zero copy principle are the corner stone of the Kafka high performance.

How to store password in the database.

Password is not stored in plain text anyone having the database access can easily read the password.

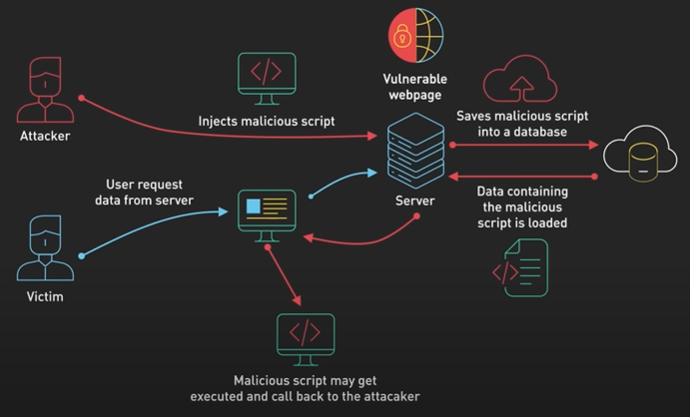

Open Web Application Security Project - OWASP provides some guidelines on how to store password.

Using modern Hashing function. It is one way it is impossible to decrypt hash.

There are some common legacy hashing function like MD5, SHA-1 are fast. They are less secure and should not be used.

Another way - SALT the password meaning add a unique random generated string in each password as a part of hashing process. Attackers will access the password using a technique called rainbow tables and database locks hacker can crack the password in seconds.

Password provided by the user and then added a randomly generated salt and then you make a hash function and value of this entire thing. The hash is stored in the database along with the salt.

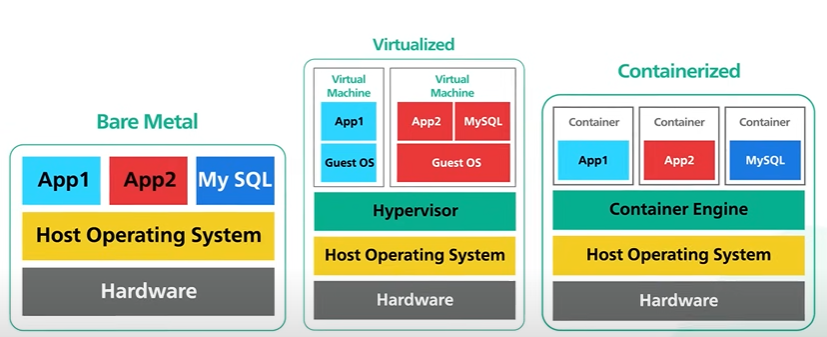

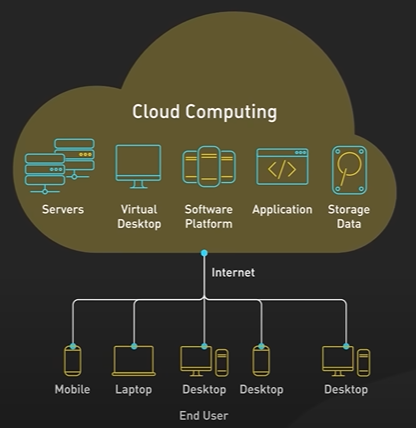

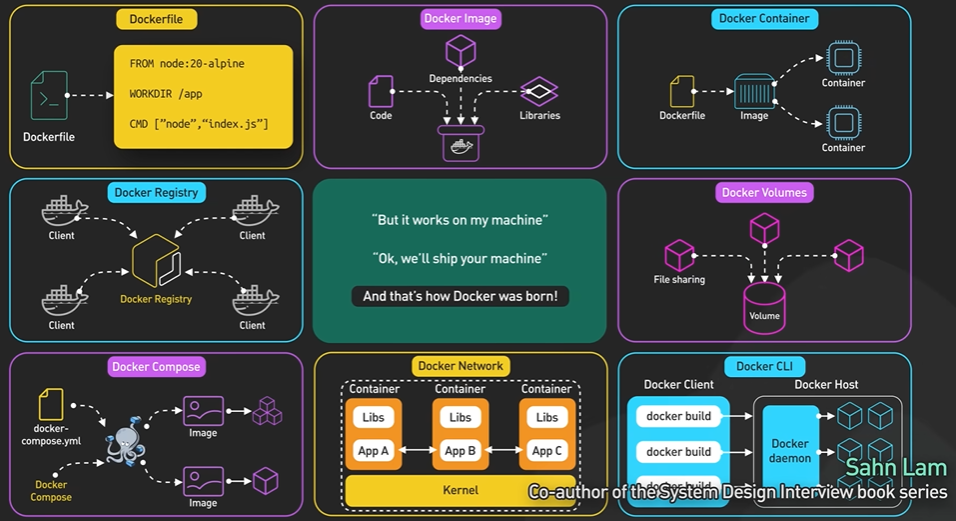

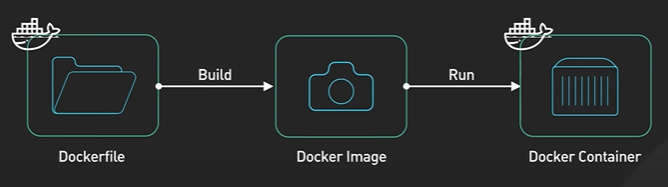

Bare Metal, Virtual Machines and Container.

Bare metal server is a computer that is a single tenant only. Bare metal gives all the hardware resources and the software to run. Bare metal server are physically isolated and the isolation helps in not getting impacted by one network bandwidth with the other high CPU use.

Bare metal is expensive hard to manage hard to scale.

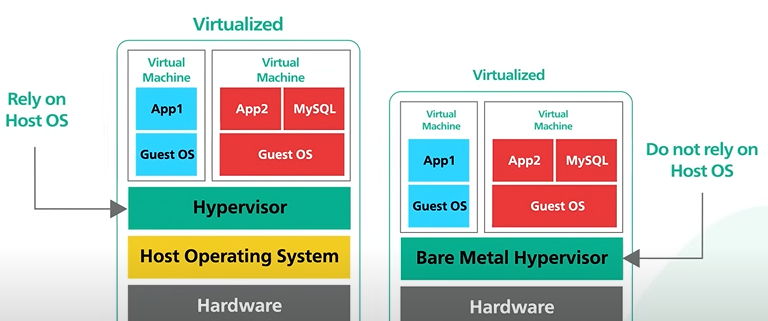

Virtual machine is an emulation of a physical computer. Virtual machine run on a single piece of a bare metal hardware On top of that there is the host operating system and on top of that there is a special software known as the hypervisor.

Hypervisor monitors the virtual machine it creates a layer over the hardware so that multiple operating system can run along side each other. Each virtual machine has its own guest OS. On top of the operating system runs the application.

Bare Metal Hypervisor is different that Bare Metal Hardware.

Bare metal hypervisor controls the hardware directly with relying on the host operating system. It gives the hypervisor full control over the hardware and provide high performance.

Hardware that supports Bare metal hypervisor are expensive.

Virtual machines are cheaper to run and share the same hardware allowing much high resource utilization.

Virtual machine is vulnerable for the noisy neighbor problem where one system and application will get impacted by other system being using 100% CPU or 95% network bandwidth. Virtual machine running on the same bare metal hardware shares the same physical cpu core which makes it vulnerable for any attacks.

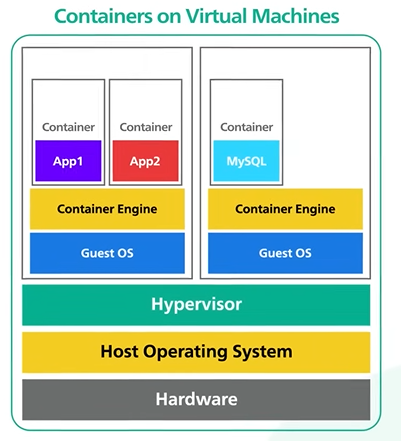

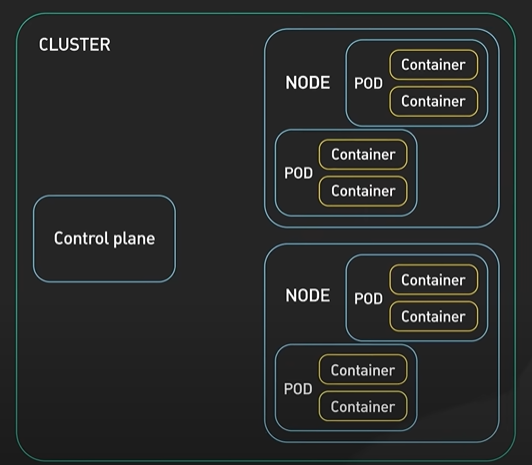

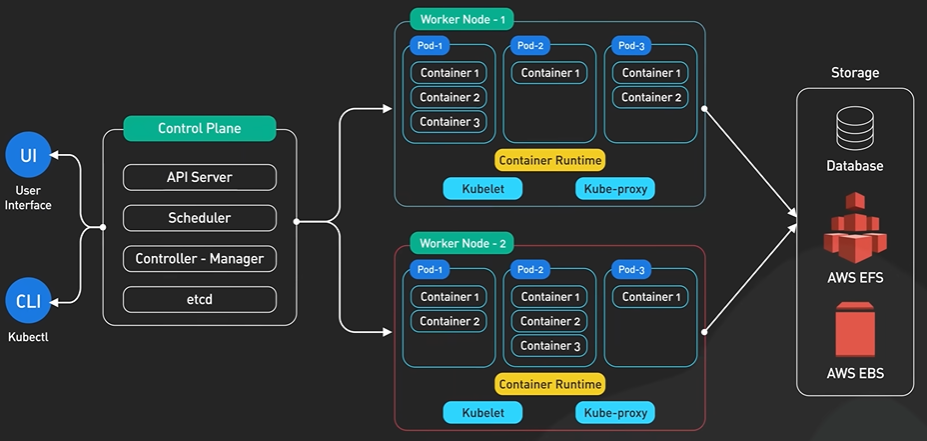

Container is a lightweight and standalone application with all its dependencies. Continuation is a lightweight version of virtualization.

Here there is a bare metal hardware with a host operating system But instead of virtualizing the hardware with the hypervisor like in virtualization we virtualize the OS with a special software called the container engine. On top of this container engine they run the container which is an individual applications isolated from each other.

Containers is scalable and portable and there are lightweight to run on the virtual machine. A Bare Metal server can hold more Containers than virtual machines. Containers are easy to deploy.

They share the same underlying operating system and the isolation are the operating system level. Containers are exposed to wider class of security vulnerability.

We can run containers inside the Virtual machine and it will increase the security.

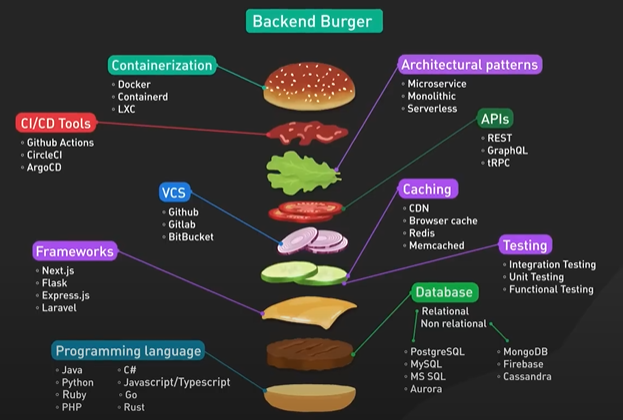

After container there is the serverless and edge computing. The server less architecture using the GCP AWS.

Design a location Based System - Yelp.

Designing a proximity service meaning designing the best restaurant nearby or mapping to the closest gas station.

Functional requirement.

Given a user location in the search radius as input. Return all businesses within the search radius.

Business owner can add delete update a business. The changes doesnt need to be real time it can appear after one day.

User can view the details of the businesses in that page.

Non-functional requirement.

DAU - 100M.

Business - 200M.

The latency should be low users should be able to find the nearby businesses quickly.

The services should be highly available it should be able to handle traffic spikes during peak hours.

Assumptions.

DAU - 100M.

Business - 200M.

The daily active users are making around 5 queries per day.

100 Million DAU * 5 searches per user /100000 seconds in a day = ~5000.

The database storage needed to store 200 million of data to estimate that we need to understand the schema design.

High-Level Design.

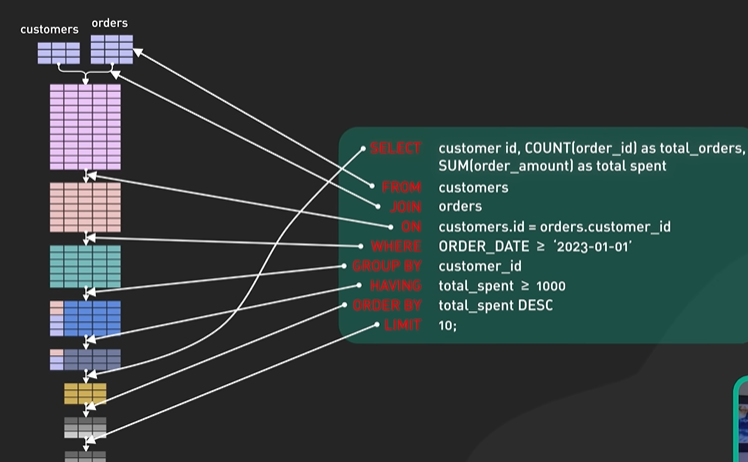

API design - Restful.

There were two broad api categories one to search the nearby businesses and one to get the details of the business.

GET /v1/search/nearby

|Field|Description|Type| |—-|—-|—-| |latitude|Latitude of a given location.|double.| |longitude|Longitude of a given location.|double.| |radius|Optional. Default is 5000 meters (about 3 miles)|int|

{

"total": 10,

"business": [{business object}]

}

We have shorten the api and can include pagination.

API to manage the business object.

GET /v1/businesses/:id Return detailed information about the business.

POST /v1/businesses Add a business.

PUT /v1/businesses/:id Update details of a business.

DELETE /v1/businesses/:id Delete a business.

The store required for the business table -

200 Million business * 1 KB = 200 * 10^6 * 1KB = 200GB.

The storage required for the nearby search table - 200 million * 24 bytes = ~ 5GB.

This database is very small so when the database is small then we can have wide variety of design and also we can use in memory database as well.

High Level Design.

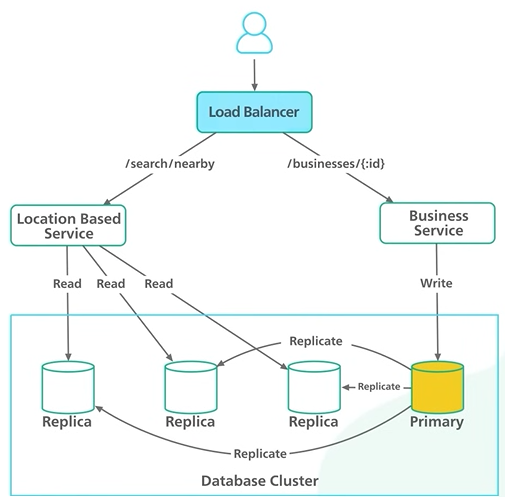

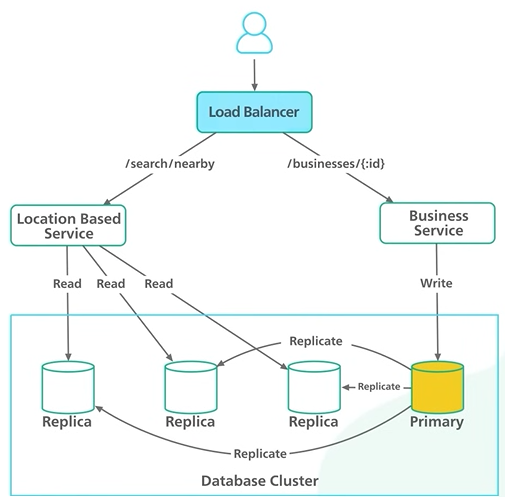

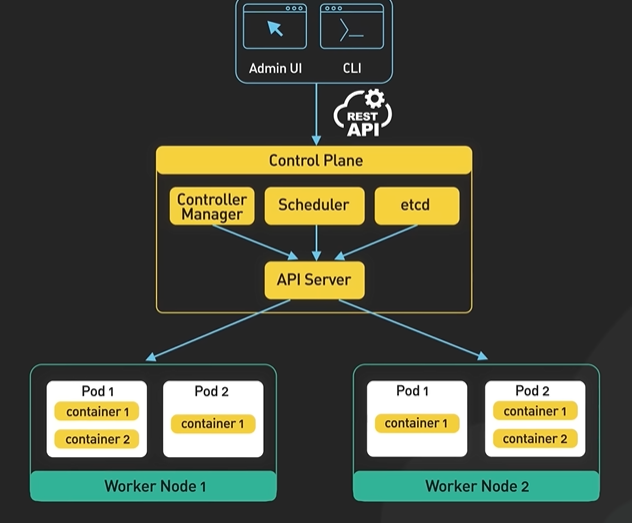

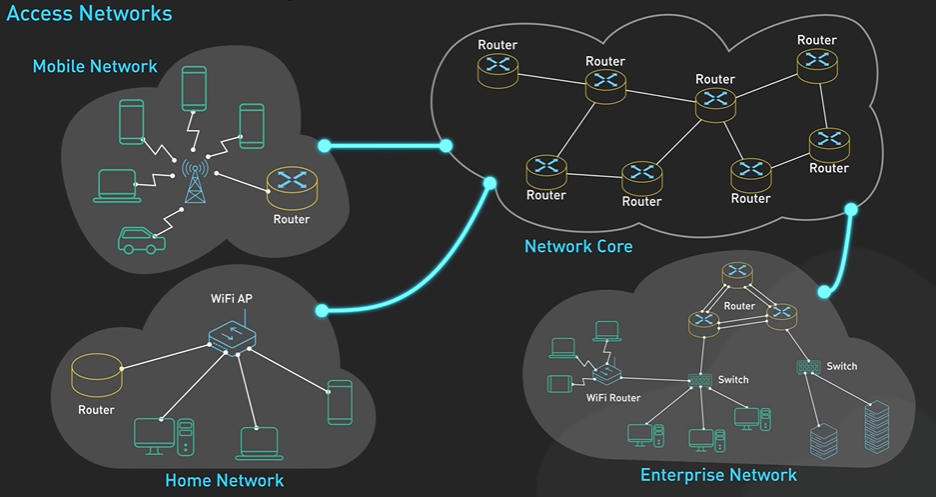

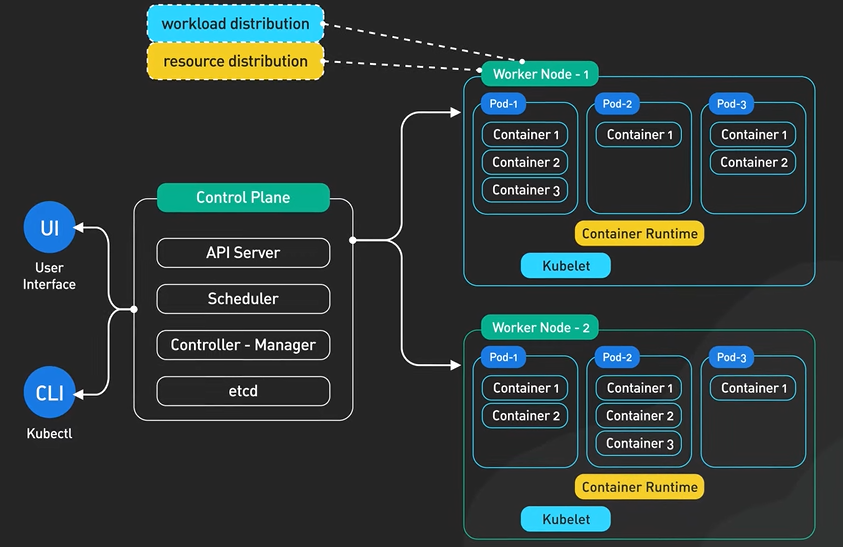

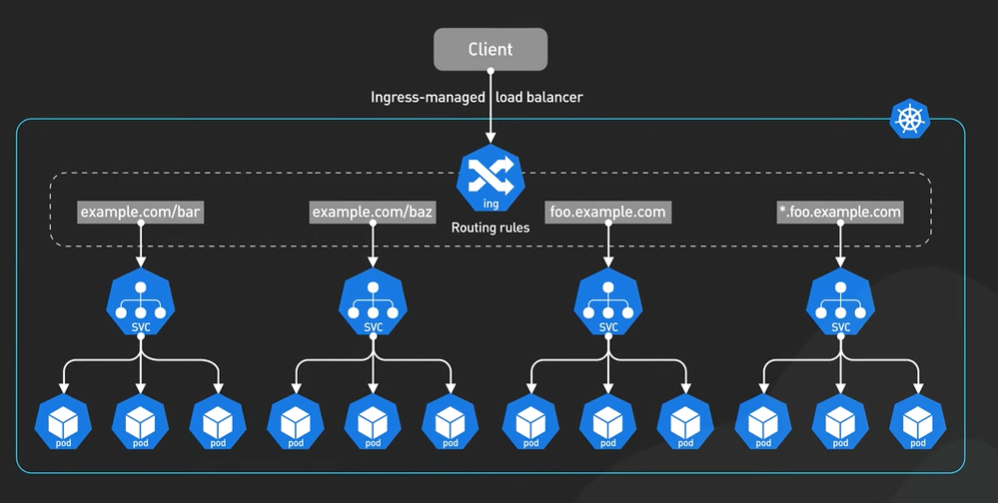

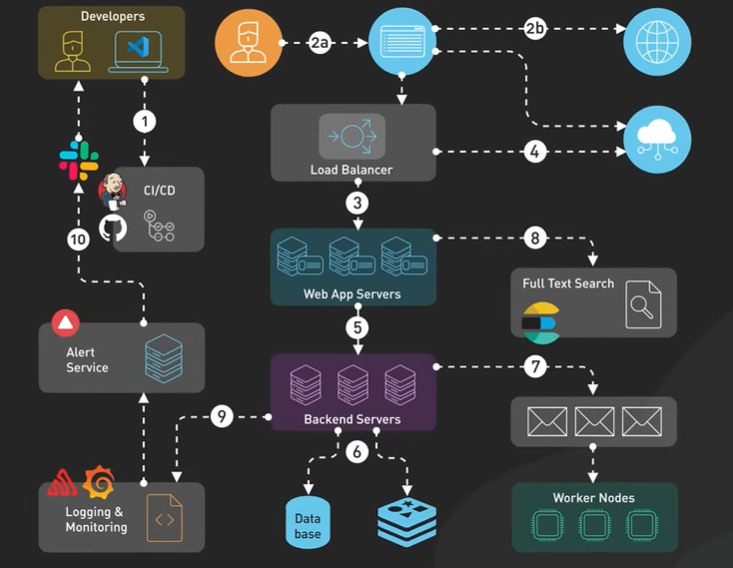

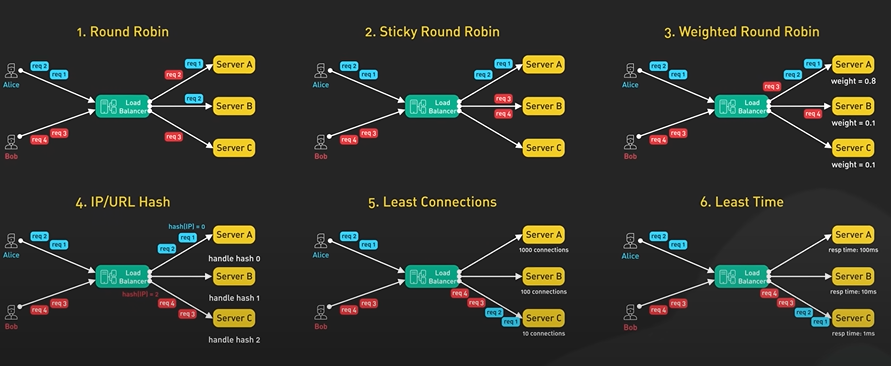

The load balance distributes incoming traffic across two services based on the api routes.

Boat services are stateless deploying stateless services behind a load balancer is a common design.

Company can use other setup to distribute traffic to services it could be envoy in a kubernetes cluster or API gateway on AWS.

The location based service has few characteristics -

Read heavy with no write request at all.

The QPS is high 5000 qps was our earlier estimate.

Service is stateless. It should be easy to scale horizontally.

The business service manages the business details and the qps of the update is not high.

In the recruitment we have understood the changes can reflect after sometime.

The read will be high and the qps is going to be high during peak hours. The data will not be changed frequently so it can be easily cache.

The system is read heavy.

Read qps is much higher than the writes qps.

The writes don’t need to be immediate.

On this observation we can say that the database cluster can be used with the primary secondary setup.

The primary database will be handling all the right requests and the read replica handles the heavy read request.

Twitter will be first stored into the primary and then it will be replicated to the other database there can be a bit of delay in the updated and consistent data but again the changes does not need to be reflected in real time.

Design Deep Dive.

Database to do the location based search - Geospatial database store and query data in geometric space like location data. Some example of these type of data are Redis GEOHASH and Postgres PostGIS extension.

Understanding how the db works - discuss the common algorithm behind the geospatial index that powers the database.

Intuitive and inefficient way - Draw a circle and find the businesses within the circle.

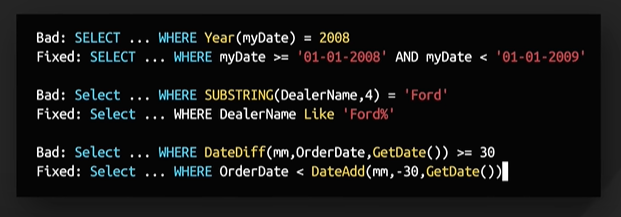

SELECT business_id, longitude, latitude

FROM business

WHERE (latitude

BETWEEN {:my_lat} - radius AND {:my_lat} + radius)

AND

(longitude BETWEEN {:my_long} - radius AND (:my_long} + radius)

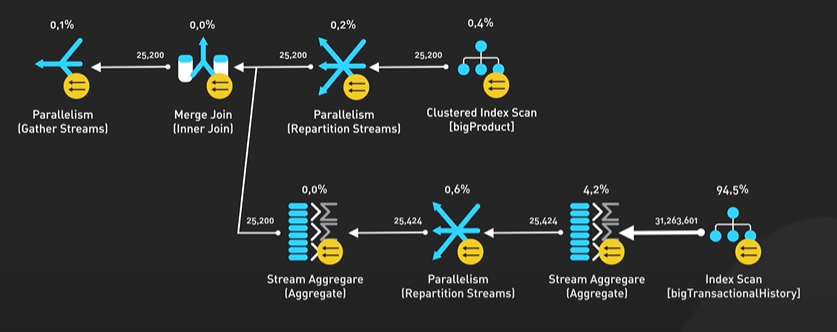

The query is not efficient and it search in 200M data.

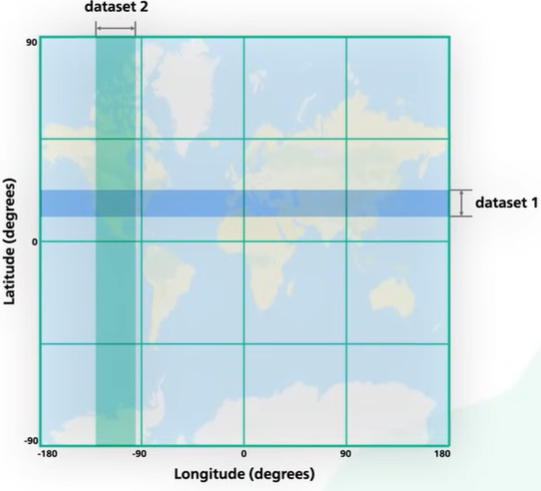

One way to index the latitude and longitude. Data returned for each dimension is huge. We can retrieve all businesses within a latitude range or longitude range.

To fetch businesses within a search radius we need to find the intersection of those ranges. It is inefficient and each dataset contains a lot of data.

The problem with this approach is the index can increase the search in one of the two dimensions.

The follow up is can we map two dimension data into one dimension so we can build a single index on it.

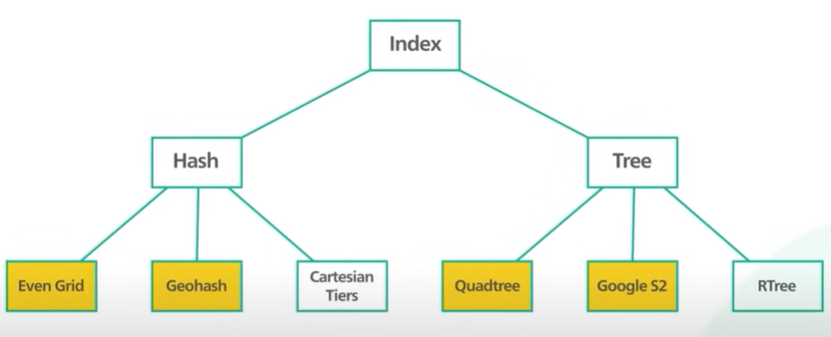

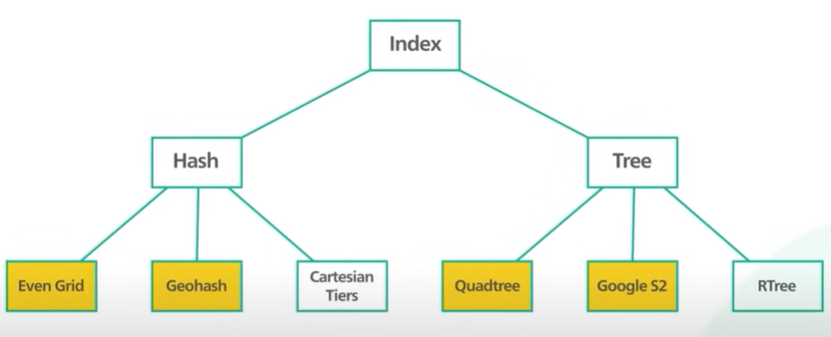

There are 2 approaches in Geospatial indexing - Hash.

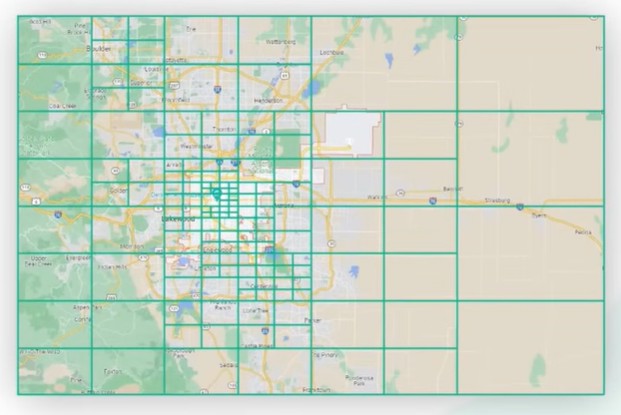

One way - evenly divide the grid and get the business within the grid. The problem is in few area the return of the businesses will be high and in few areas the business are low.

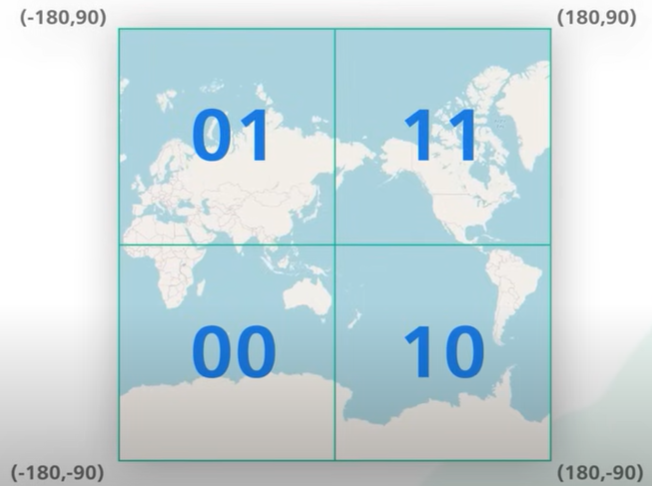

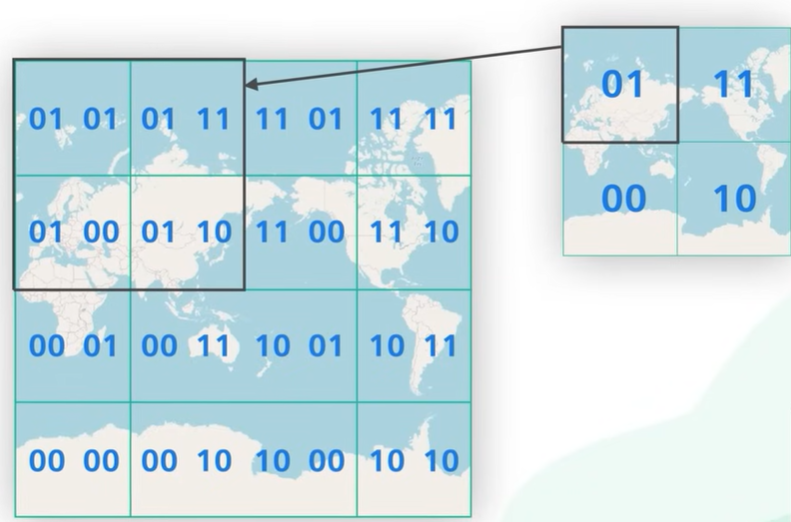

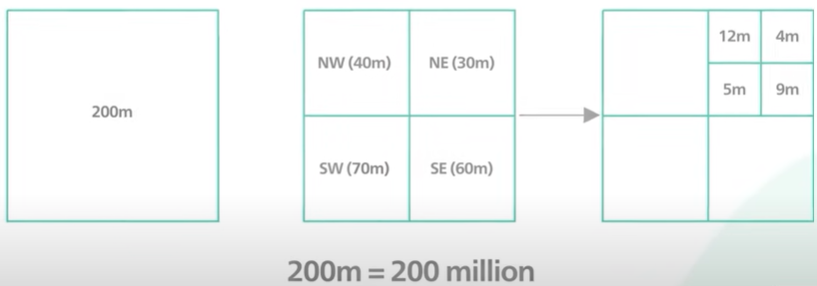

Geohash helps as it stores the two dimensional data into an one-dimensional string of letters and digits. It divides in four quadrant along the prime meridian and equator. Four quadrants are represented by two bits.

Each part is again divided into grids. The bits in the sub-grids are appended to the existing bits.

It repeats the subdivision and keeps adding more bits to the Geohash. It stopped the subdividing when the sub grid reaches the specific size.

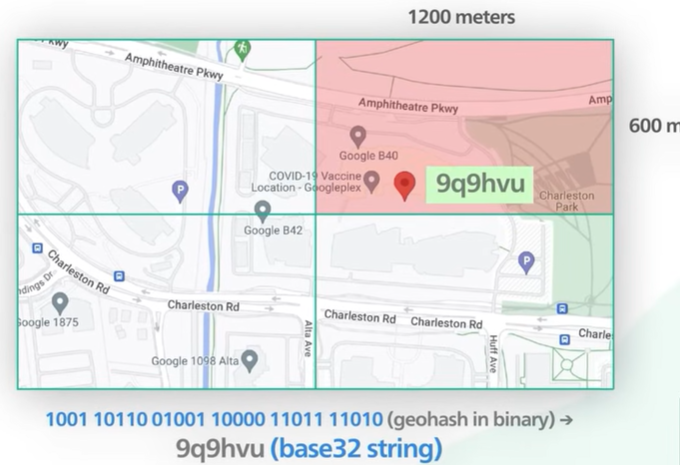

The example of a grid that contains the Google headquarter Institute of a long one and zero to represent the Jio hash design coded in a base 32 string.

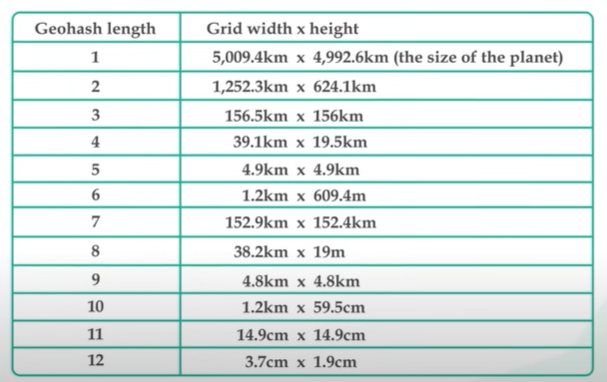

The base32 string shows the size of the grid the idle size should be 4 5 or 6. If the value is higher than 6 then the grid size is too small and if it is less than 4 then the grid size is too big.

How do we choose the right precision given a search radius?

We find the minimal geohash length that covers the whole circle. For example if the radius is 2 km the Geohash length should be 5.

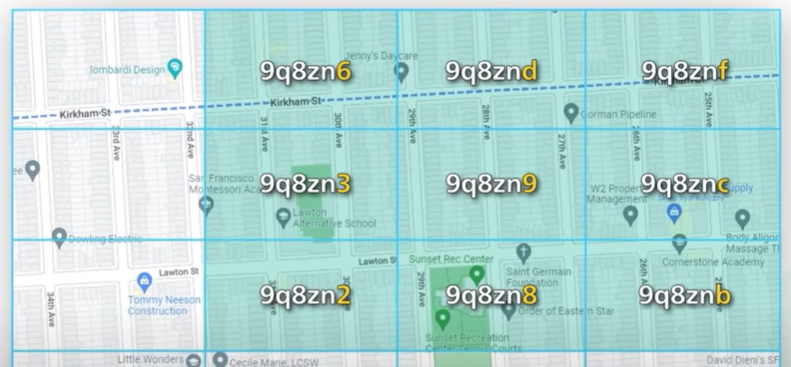

Another property Geohash is a string and searching all business within a geohash is very simple.

There are some cases when did you hash might not work properly. These edge cases have to do with how the boundaries are handled.

When two geohash strings share a long prefix we know they are close. The grid 9q8zn shares the same prefix.

The reverse is not true. Two places could be right next to each other but have no shared prefix a the grid on the either side of the equator or the prime meridian are in different halves of the world. Example two cities in France can be nearby but they are not sharing any common prefix.

Another boundary issue can be the two locations can have long shared prefix but they are in different geohash.

The common solution to bowl of these issues to fetch businesses is not only within the current date but also 8 grids surrounding it.

Calculating the neighboring geohash is easy and done in constant time using the library.

Implementing the location best service using a tree based indexing.

The common tree index is quadtree and googles2.

QuadTree.

A Quadtree data structure that partition a two dimensional space by recursively subdividing it into 4 quadrants.

The subdivision stops when the greet meets certain criteria.

In out case the criteria can be keep dividing unto the number of businesses in a great his no more than similar say 100.

A keyboard for Quadtree or any other tree-base index is that it is an in memory data structure and not a database solution.

It means that the index is built by our own code and runs on the location-based servers.

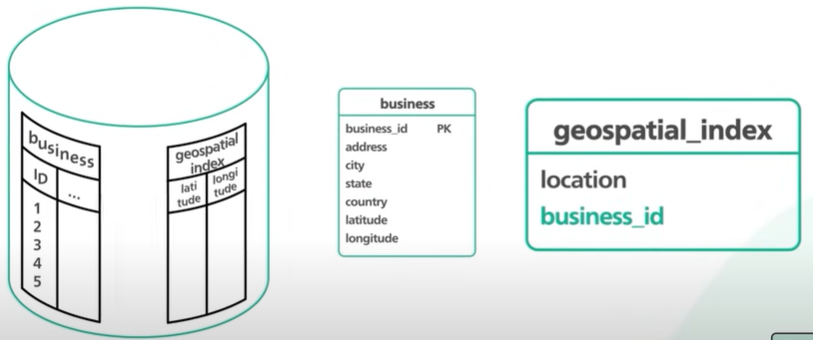

We use the Geohash as the geospatial index to speed up the location search.

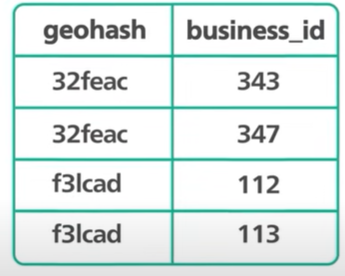

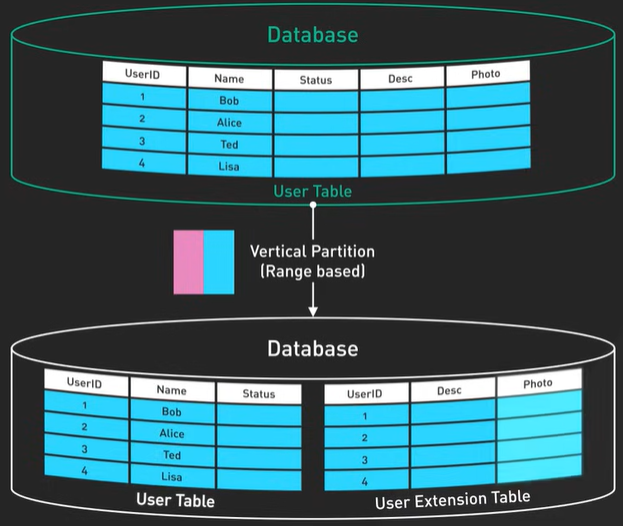

How do we structure the geospatial_index table?

It should contains two columns - geohash and business Id. Any relational db can handle geohash. The Geohash column contains the geohash of the right precision for each business.

Converting the latitude and longitude to the geohash is easy and libraries can do it.

Two things we should discuss with the table schema - Many business would share the same geohash. It means different business Ids within the same Geohash are stored in different database rows. The geohash and business id columns together form a compound key.

With the compound key, it makes the removal of a business from the table efficient.

In our application we are only interested in the geohash with precisions 4 to 6. They correspond to different search radii from 0.5km to 20 km.

The geohash column will all have the precision of 6. We use the LIKE operator in SQL to search for shorter prefix length.

Using the query we find everything that is within the geohash of ‘9q8zn’.

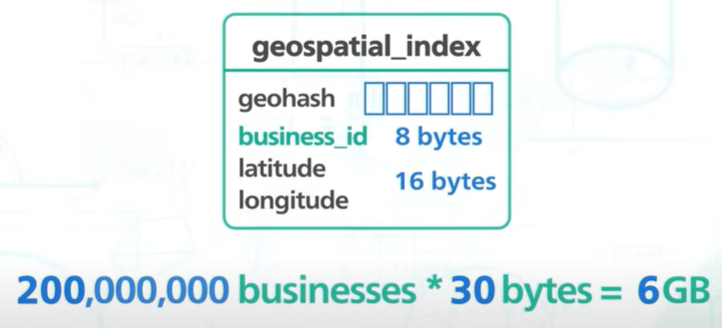

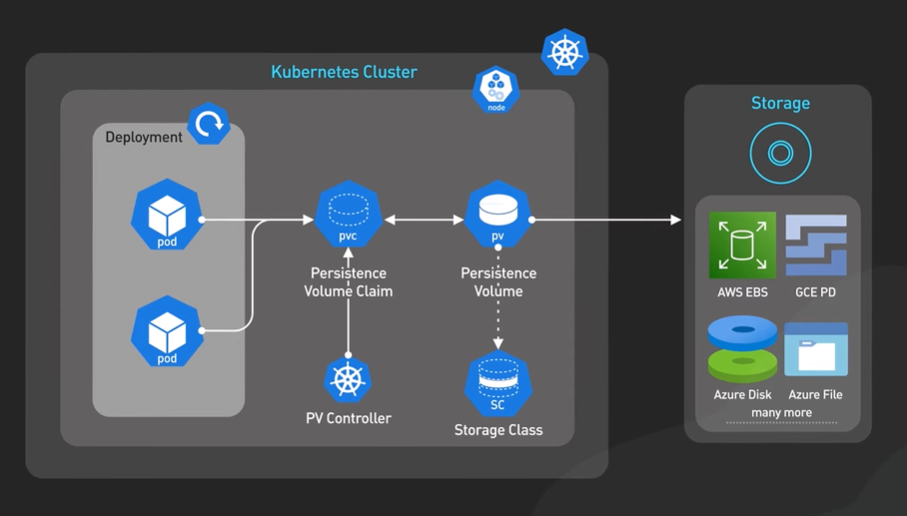

Scale the geospatial index table.

We do not need to scale more than one db table. The column geohash is 6 bit long and business_id is 64 bit like 8 bytes. We can also store the latitude and longitude so it is easy to calculate the distance of each business from the user and its about 16 bytes. Each row is 30 bytes.

200 million business the data is 6GB.

The database can be fit inside one server the read QPS is 5000 which is quite high.

The single db not have enough CPI or network bandwidth to handle all the read request.

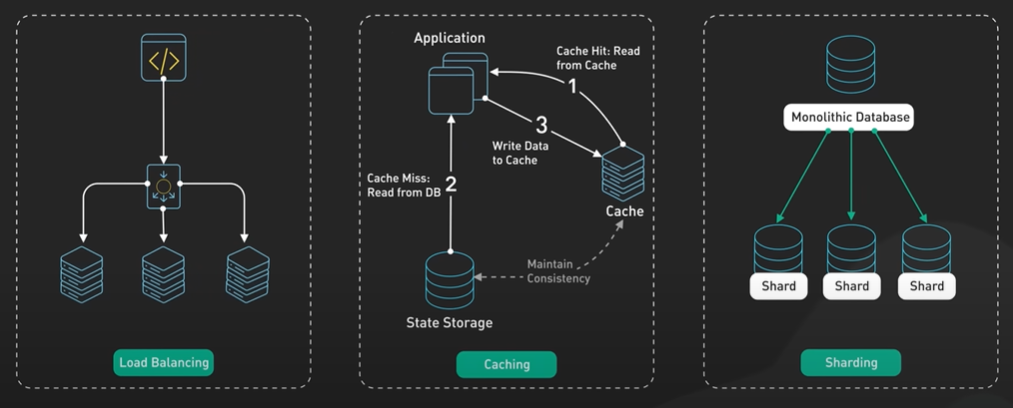

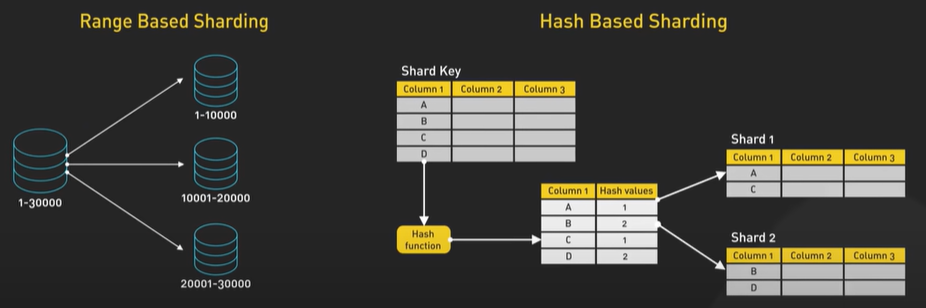

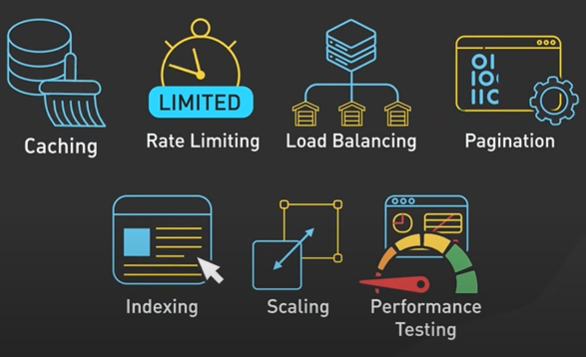

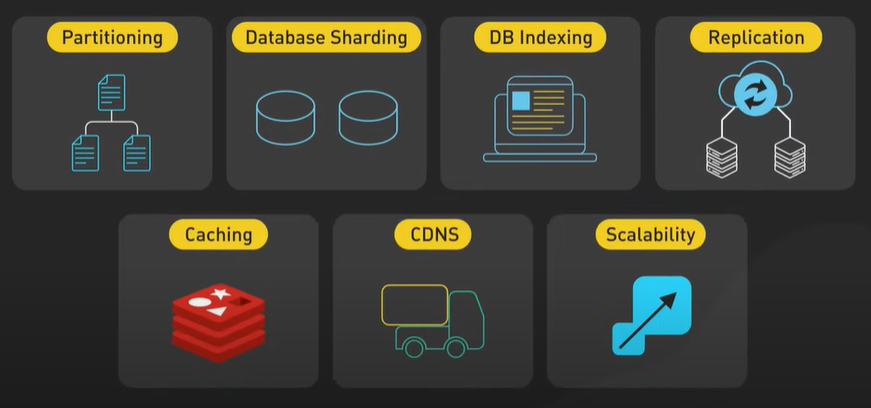

Increase the speed of read there are two ways - Read replica or sharding.

Sharding is complex. It requires sharding logic in application layer. In the application entire database can be in a single machine there is no reason to use shard.

The better approaches to use the read replica of the geospatial_index table to help the read load.

Do we need a caching model in our design?

Our workload is read heavy and the Geospatial data set is small.

The read performance is bottlenecked then we can use read replicas.

There is another dataset to design - business table.

The data set for the business table with 200 million businesses is in terabyte range. Modern hardware can perform it. The dataset size is on the borderline where sharding might make sense.

The update rate is low and the db is read heavy. We should be able to get away without sharding if we put a cache in front of it.

The cache will take most of the read load of the frequently accessed businesses and it should give the business table quite a bit of headroom to grow.

There are a lot of options for the business table and first one is to use single table and get the product grows and with the help monitoring we can react to the growth and usage pattern and decide later to either shard or add more read replicas or add cache in front of it.

Search workflow.

Bob tries to find the restaurant within 500 meters. The client sends the location and search radius to the load balancer.

The load balancer forward the request to the location based service.

Based on the location and search radius, the service finds the Geohashes precision that matches the search request.

500 meter maps to the geohash length of 6.

This service calculates the neighbouring Geohash. There will be a total of 9 Geohash to query against the geospatial index table.

The service sends a query to the database to fetch the business ids and the latitude and longitude pairs within those geohashes.

The service uses the latitude and longitude pair to calculate the distance between the user and the businesses and rank it and return the business to the client

UPI scan and pay.

https://youtu.be/XS8ACikD2qs?si=dt6TPUUopLTV4agv

This method of payment is available via digital wallet apps like Paytm, PayPal and Venmo by scanning a dynamically generated QR code at the point of sale terminal. How does it work? Let’s take a look. To understand how it works, we need to divide the “Scan To Pay” process into two parts. The first part is for the merchant to generate a QR code and display it on screen. The second part is for the consumer to scan the QR code and pay. Let’s take a look at the steps for generating the QR code. When we’re ready to pay, the cashier clicks the checkout button. The cashier’s computer sends the total amount and order ID to the payment service provider. The PSP saves this information to the database and generates a QR code URL. The PSP returns the QR code URL to the cashier’s computer. The cashier’s computer sends the QR code to the checkout terminal. The checkout terminal displays the QR code. All these steps complete within a second. Now the consumer pays from the digital wallet by scanning the QR code, and here are the steps involved. The consumer opens the digital wallet app and scans the QR code. The total amount to pay is displayed in the app after confirming the amount. The consumer clicks the pay button. The wallet app notifies the PSP that the given QR code has been paid. The PSP marks the QR code as paid and returns a success message to the wallet app. The PSP notifies the merchant that the consumer has paid the given QR code. This is how to pay using a dynamic QR code. It is dynamic because the QR code is generated for one-time use. It is also possible to pay by scanning the printed QR code at the merchant. This is called static QR code. It works a bit differently and we might cover it in a different video. If you would like to learn more about system design, check out our books and weekly newsletter. Please subscribe if you learned something new. Thank you so much, and we’ll see you next time.

Consistent Hashing.

Data distributed across multiple server and it is horizontal scaling.

Data should be evenly distributed in the server and can be done by hash function.

ServerIndex = hash(key) % N. N number of server.

Hash function return same value for the same key. When the number of server is same the hash function will return the same value for the key.

When the number of server change like the reduced or increased then the data stored in the server is arranged to other server. The new hash function will return new value.

Consistent Hashing main purpose is all object to stay assigned to the same server even as the number of object changes. Hashing the object key and also the server name. The object and the server name are used into the same hashing function to the same range of values.

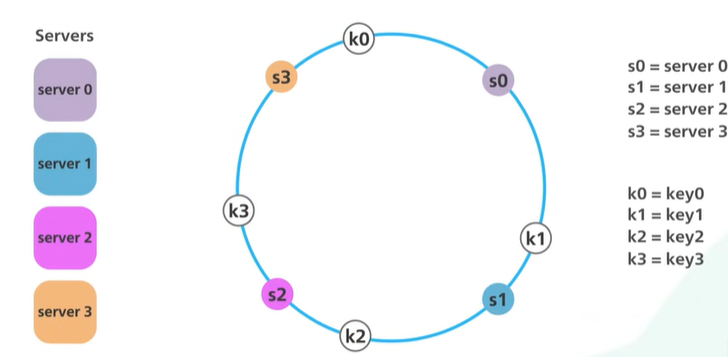

There is an array of x0 to xN and it is a hash range and the end of the array are connected making a circle.

The hash is used to map the data into the ring. The modulo is not used.

To locate the server for a particular object we go clock wise from the location of the object in the ring until a server is found.

k0 to s0, k1 to s1.

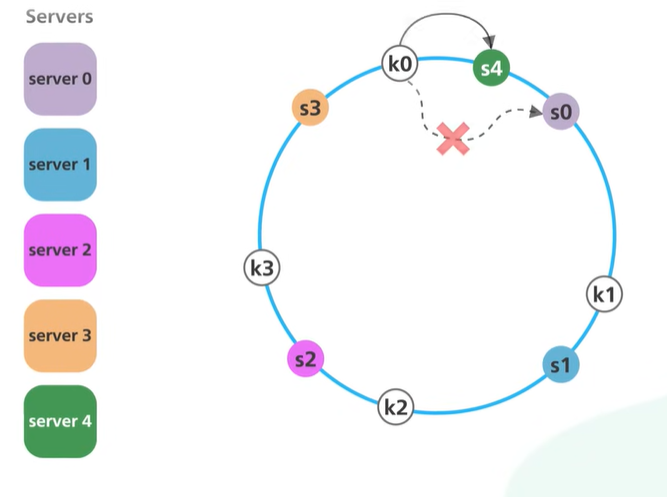

Another server added then only k0 will be mapped to s4. The other key are in their place.

With simple hashing when a new server is added almost all keys need to be remapped. With consistent hashing adding a new server only requires redistribution of a fraction of the key.

Drawbacks - When all server in a same place then all data will come to the same server.

When one server removed then the entire load come to the next server.

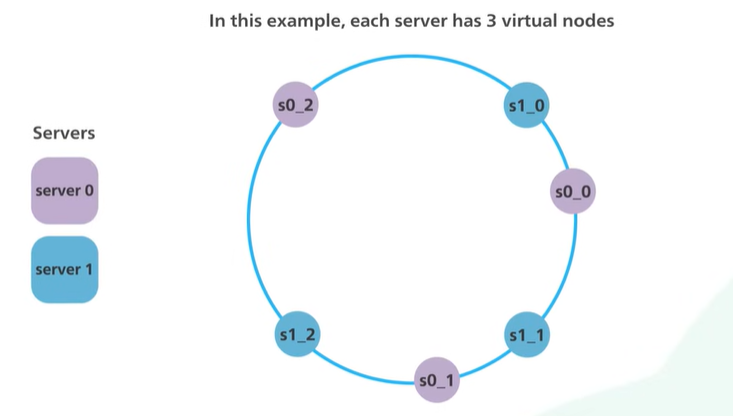

Virtual node helps in it and one server present multiple location in the ring. Each location represents the server in the ring.

The virtual node will be managed by the s0 and s1.

More virtual node the data is more balanced.

More virtual node meaning more storage to store the metadata of the virtual node.

NoSQL db like Amazon Dynamo DB and Apache Cassandra uses consistent hashing for the data partitions.

CDN uses Consistent Hashing to distribute the web content evenly among the server.

Load Balancer like Google Load Balancer use Consistent hashing to distribute persistent connections evenly.

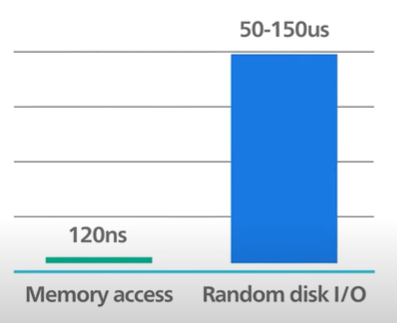

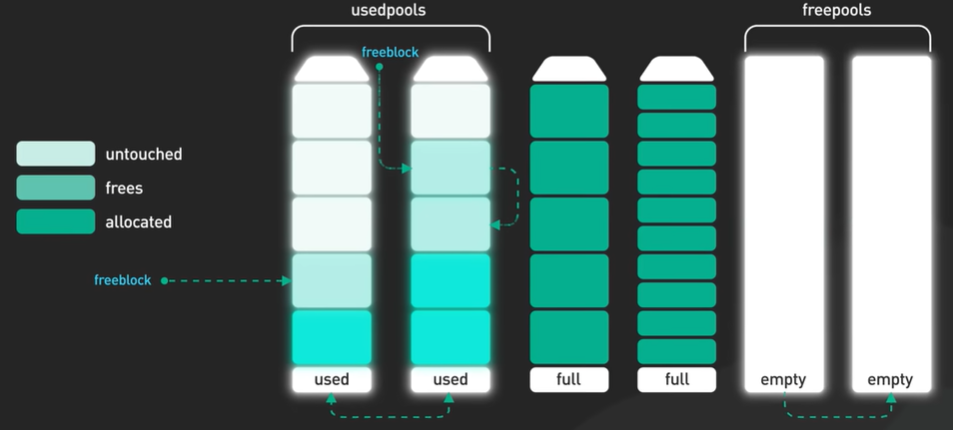

Why Redis fast?

Reddit is a very popular in memory database.

Redis uses RAM and not disk.

Memory access is faster than Random disk IO.

Pure memory gives the axis of high read and write throughput and low latency and the trade off is the data set cannot be larger than memory.

Code wise in memory data structure is far more easy to implement than on disk.

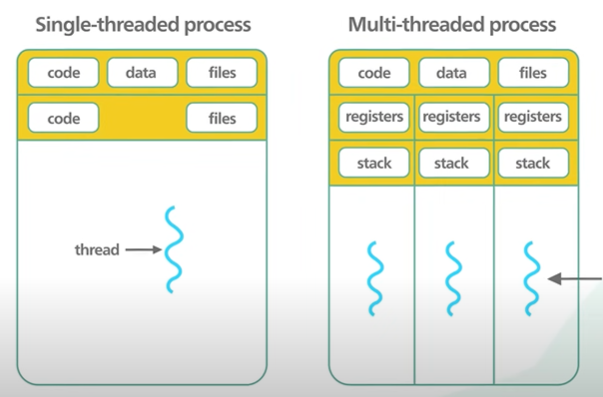

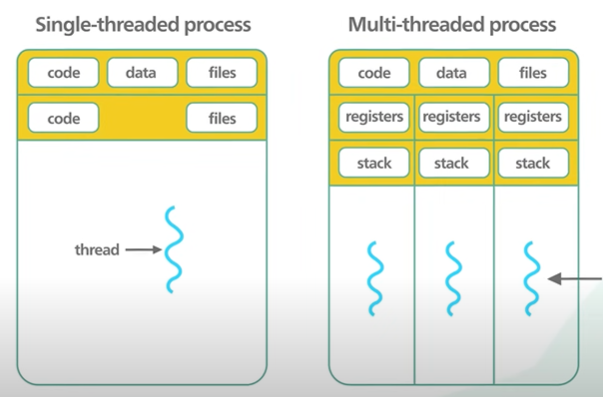

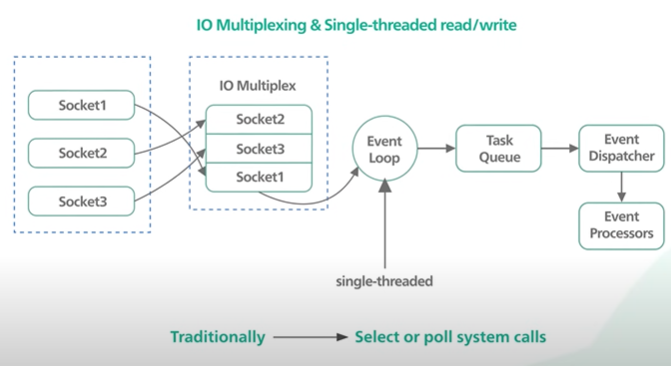

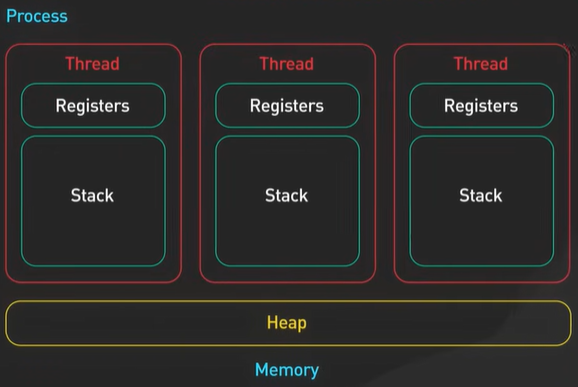

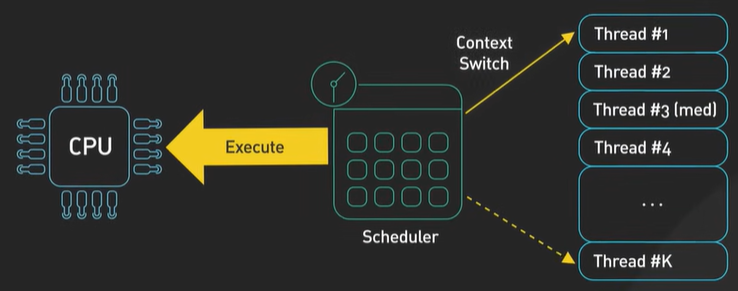

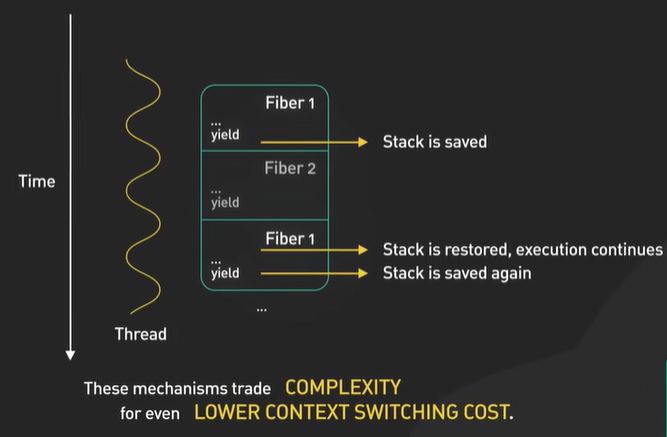

Another reason Redis is fast because it is single threaded.

Multi threaded application requires locks and other synchronisation mechanism.

How does single threaded code base handle thousands of requests incoming and outgoing ? Won’t the thread gets block waiting for each request to get complete individually?

It’s the male function of the IO multiplexing. In multiplexing operating system allows a single thread to wait on many sockets.

On Linux the Epoll is a performant variant of IO multiplexing that support thousands of connection in constant time.

One drawback of the single threaded design is that it does not leverage all the CPU course available into this modern hardware system.

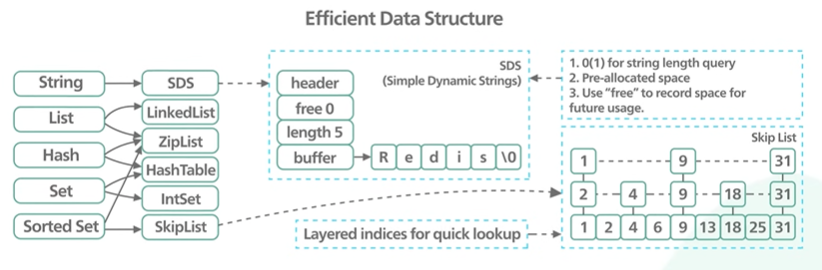

Another reason of red is being fast is that it can use the low level data structure like linked list, HashTable and Skiplist without being thinking about how to persist them to the disk efficiently.

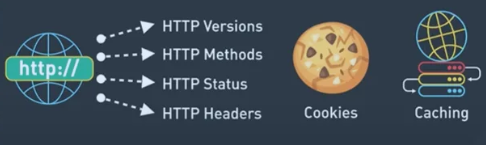

HTTP1 HTTP2 HTTP3.

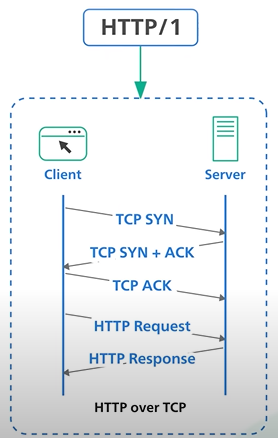

HTTP1.

Every request to the same server requires a separate tcp connection.

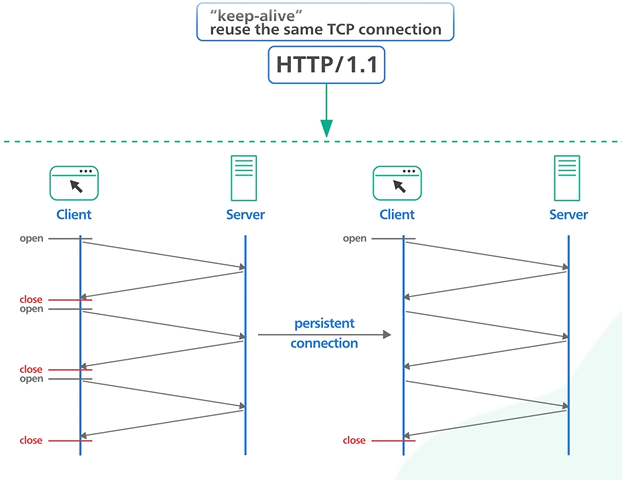

HTTP 1.1

Followed the same TCP connection. It follows the “keep-alive” mechanism and reuse the same tcp connection so that the connection can be used for more than a single request.

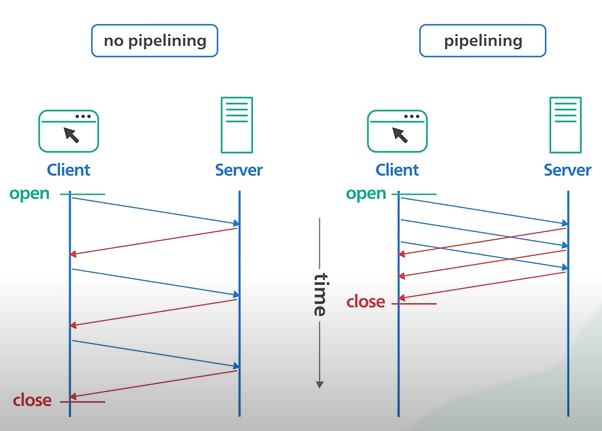

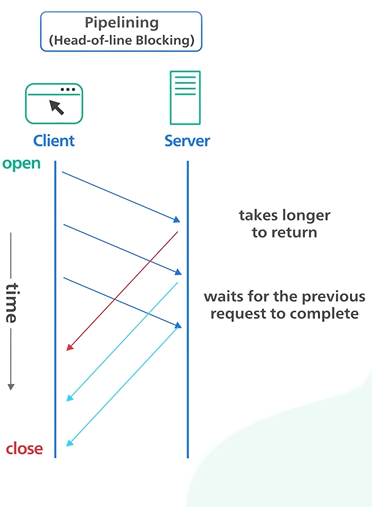

HTTP 1 .1 allowed the HTTP pipelining. It allows client to send multiple request before waiting for each response.

The response must be received in same order as it is send. It was tricky to maintain and the support removed from many servers.

When one packet lost all subsequent request are impacted.

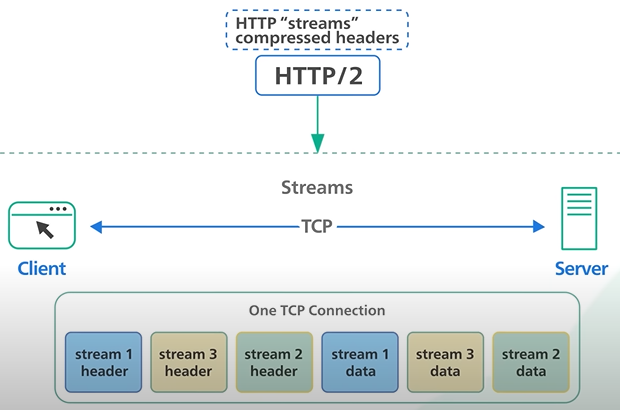

HTTP 2 each comes in stream. It solves the Head of line issue in the application layer but the issue still exists in the Transport layer.

HTTP 2 introduces the PUSH capability to the server when the client new data is available without the client to poll.

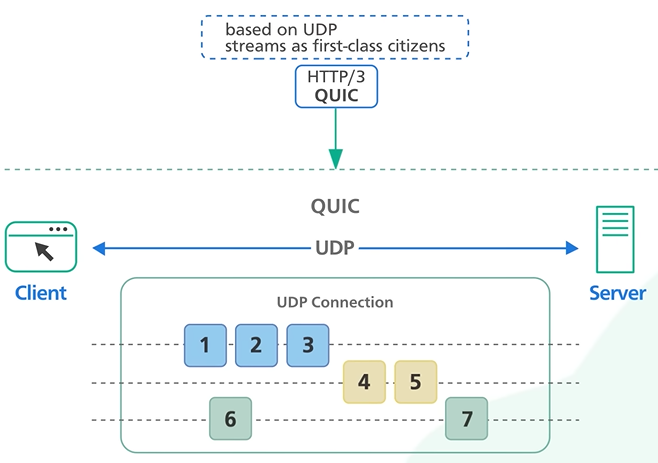

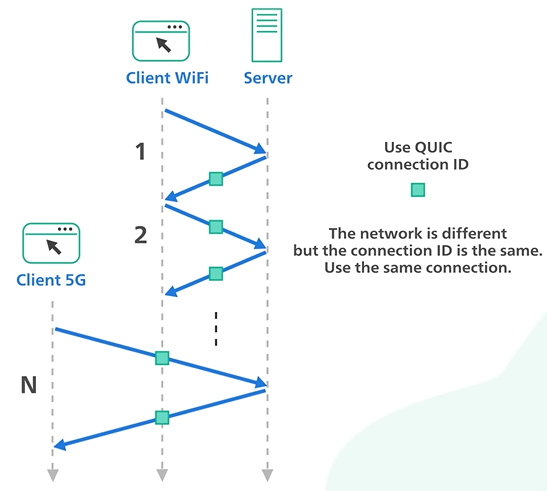

HTTP 3 uses QUIC protocol in the transport and not the TCP. It is based on UDP and consider stream as the first class citizen.

QUIC stream shares the same quic connection so no handshake required. It delivers independently and packet loss will not effect others.

The QUIC uses the Connection Id and the connection to move from the IP address and network quickly.

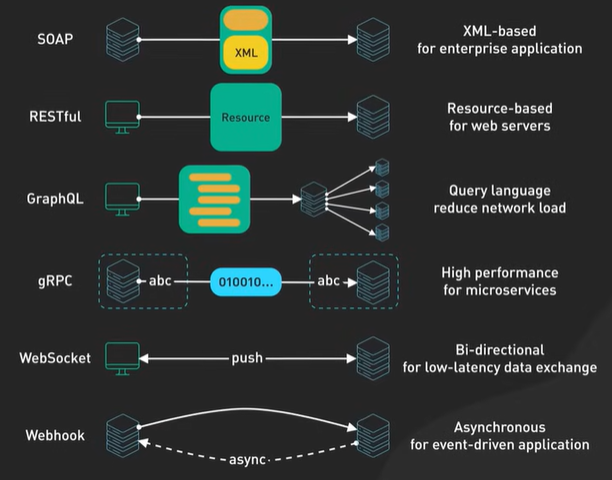

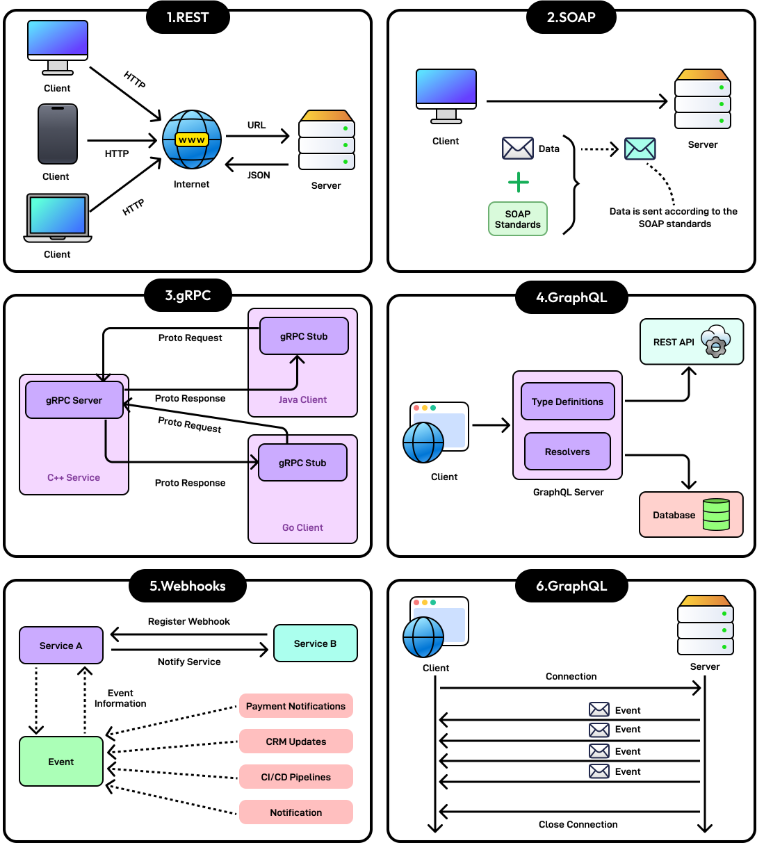

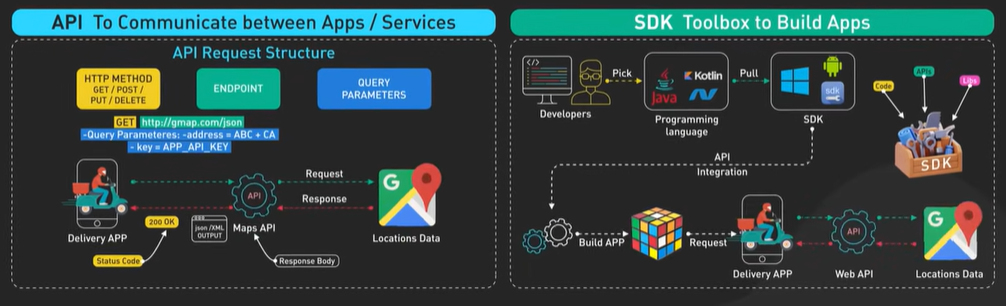

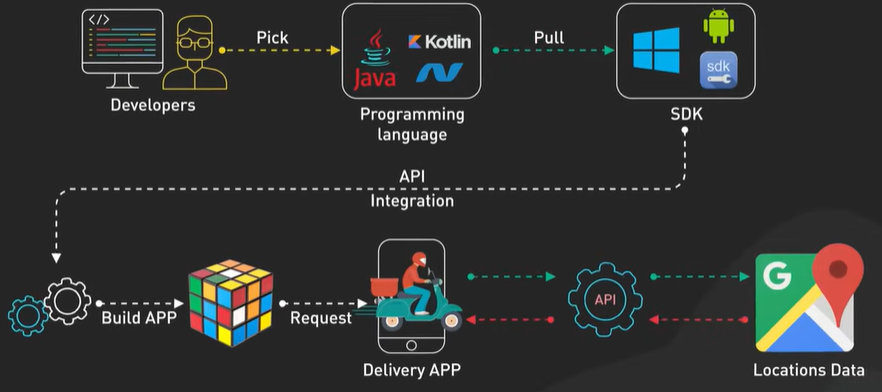

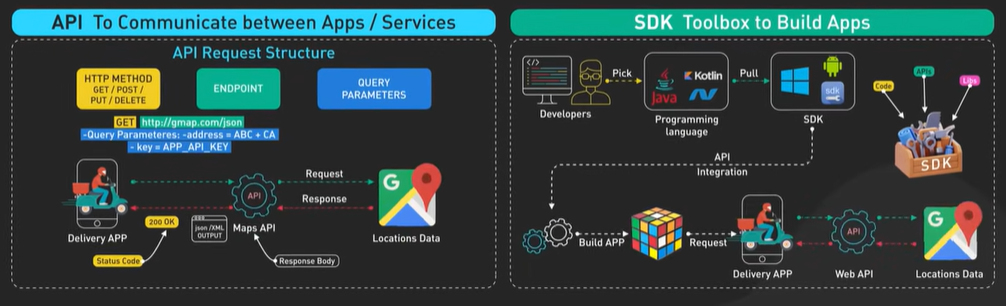

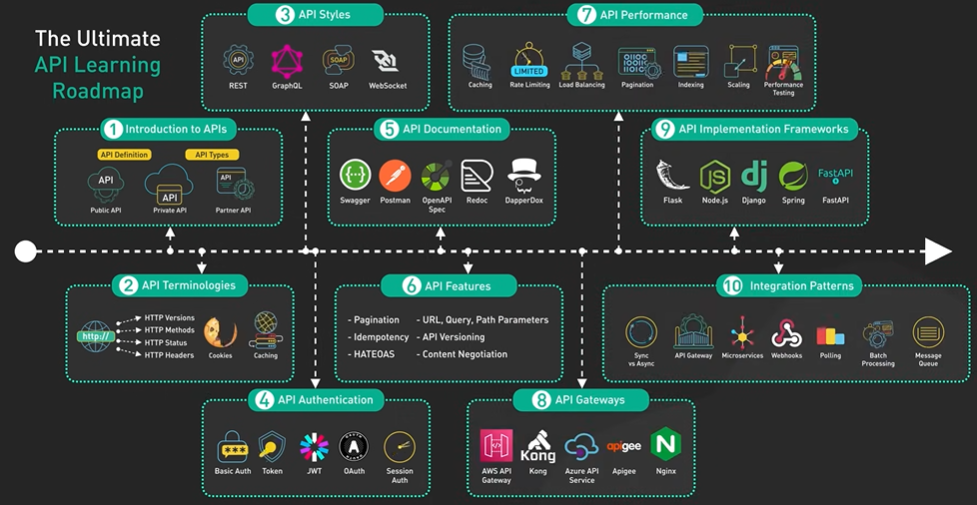

What is REST API.

API stands for Application Programming Interface. It is a way for two computers to talk to each other. The common API standard used by most mobile and web applications to talk to the servers is called REST. It stands for REpresentational State Transfer.

It is a new set of rules that has been the common standard for building web API since the early 2000s. An API that follows the REST standard is called a RESTful API.

Rest Api Rules - Uniform Interface, Client-Server, Stateless, Cacheable, Layered System, Code on Demand.

A RESTful API organizes resources into a set of unique URIs, or Uniform Resource Identifiers. The URIs differentiate different types of resources on a server.

Here are some examples. The resources should be grouped by noun and not verb.

An API to get all products should be /products and not /getAllProducts.

A REST implementation should be stateless. It means the two parties don’t need to store any information about each other, and every request and response (cycle) is independent from all others.

There are two final points to discuss to round out a well-behaved RESTful API.

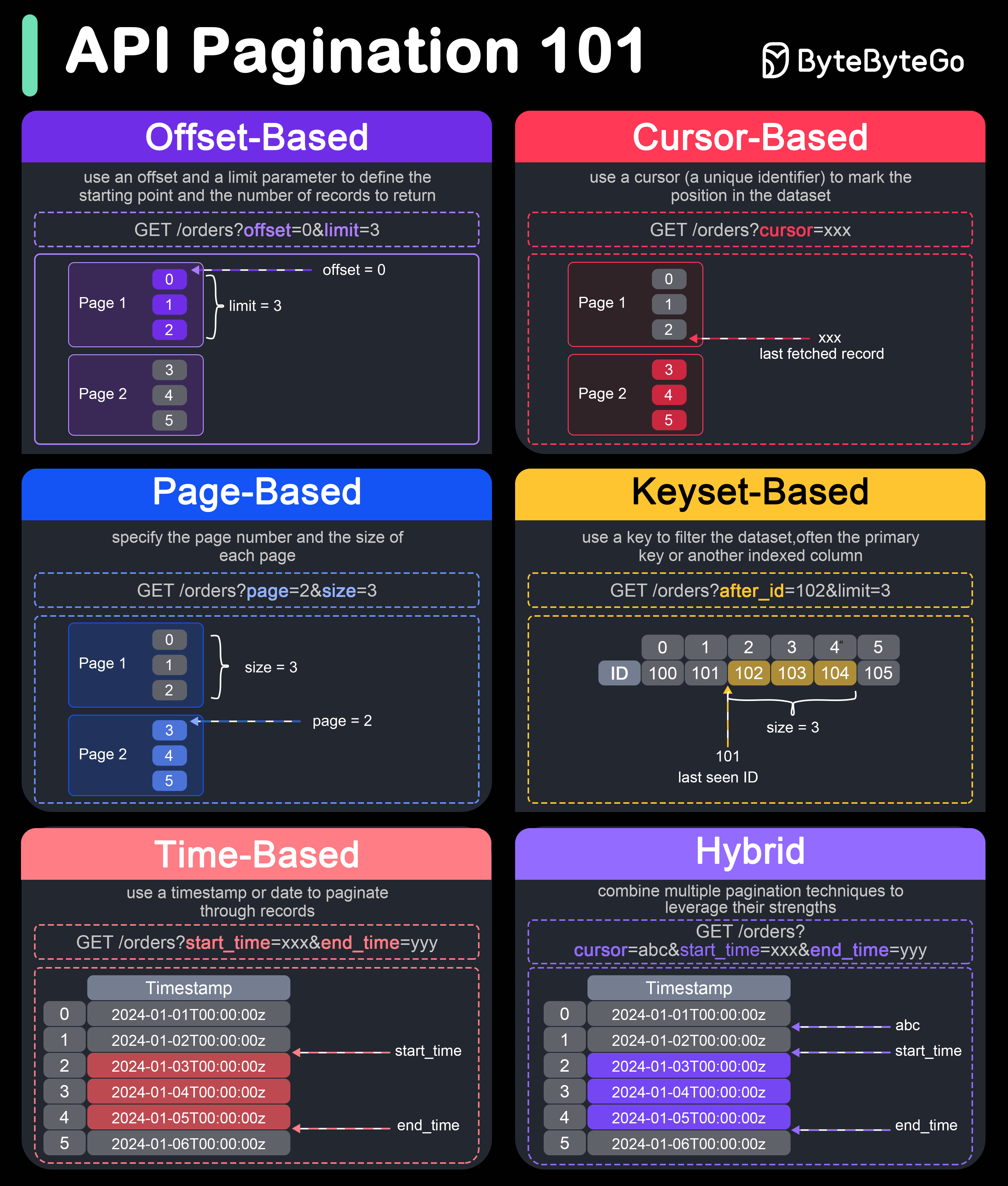

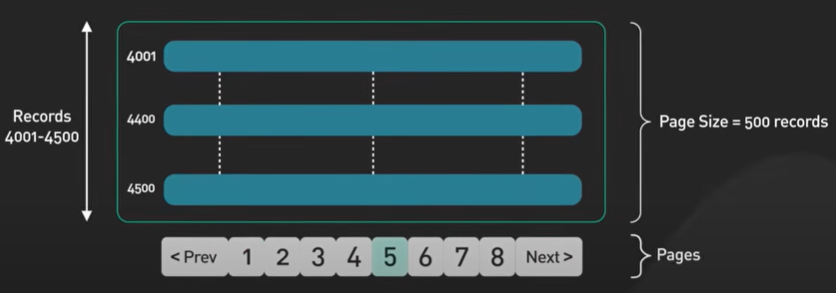

If an API endpoint returns a huge amount of data, use pagination. A common pagination scheme uses “limit” and “offset” as parameters.

Example - products?limit=25&pffset=50

Versioning of an API is very important. Versioning allows an implementation to provide backward compatibility, so that if we introduce breaking changes from one version to another, consumers can get enough time to move to the next version.

The most straightforward is to prefix the version before the resource on the URI v1/products.

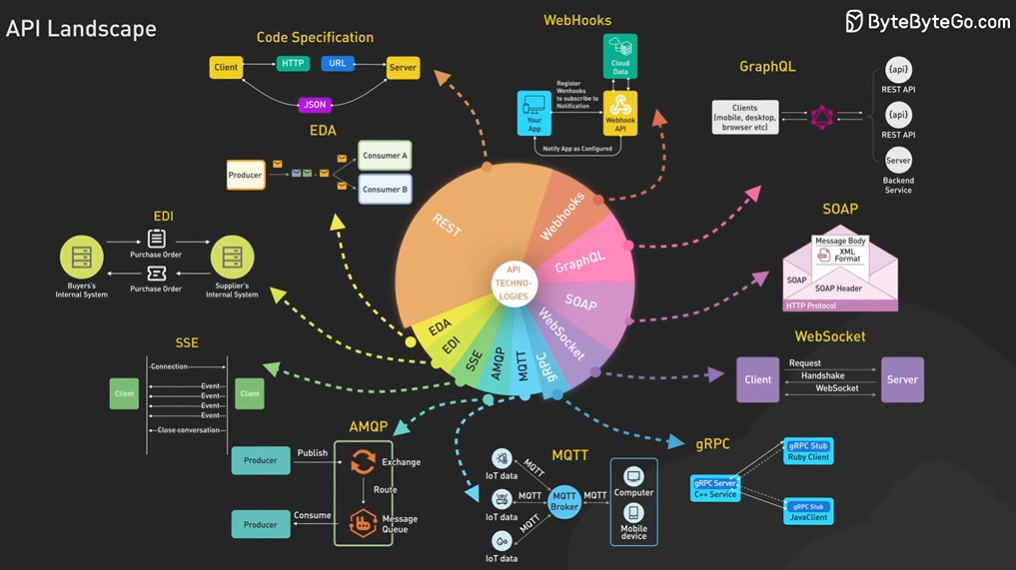

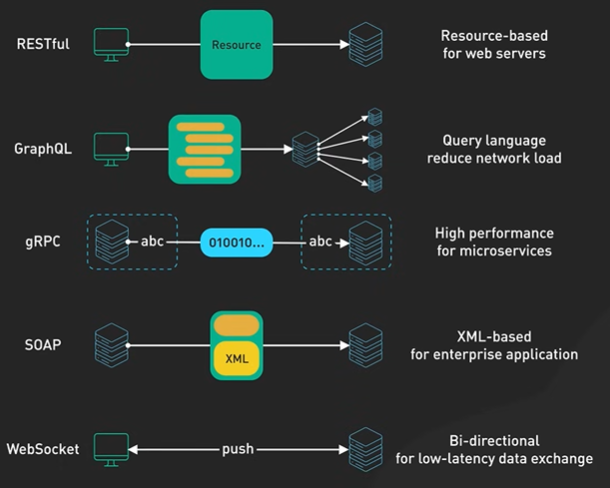

There are other popular API options like GraphQL and gRPC.

NOSQL LSM Tree.

NoSQL Database like Cassandra became very popular. The secret sauce for the NoSQL Db is the Log Structure Merge Tree.

LSM Tree is optimized for fast write.

How data is store in a relational Database.

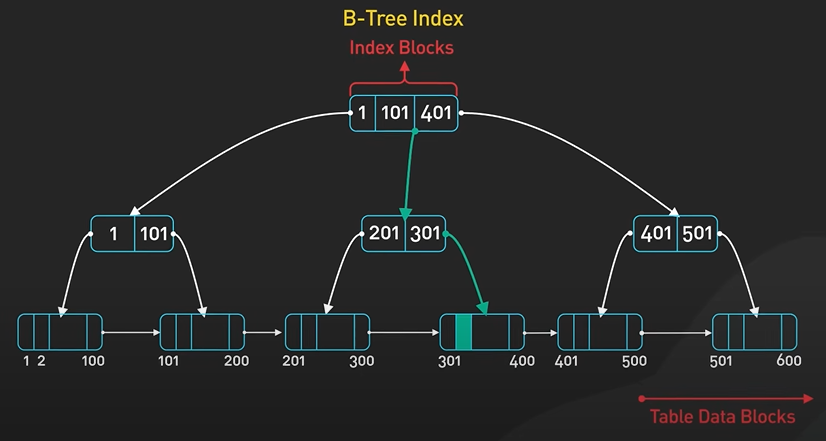

In relational database cities generally done by B Tree and B Tree is optimized for reads.

Updating the B Tree relative to expensive as it involves random IO and include updating multiple pages on disk. It limit how fast a B Tree can ingest data.

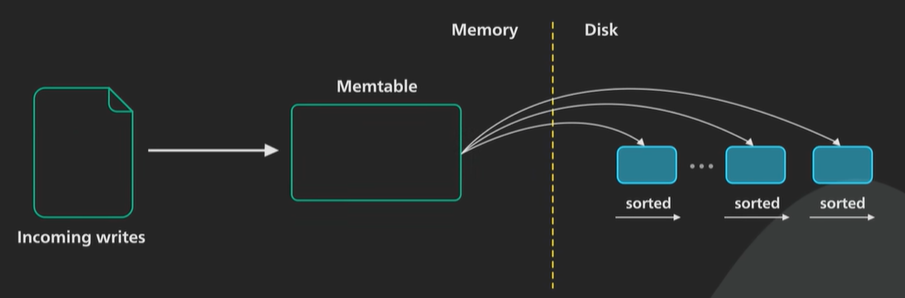

LSM works differently, writes are batched in memory as they arrive in a structure called a memtable. A memtable is ordered by object key and is usually implemented as a balanced binary tree.

As a memtable reaches a certain size it is flushed to disk as an immutable Sorted String Table.

An SSTable store the key value pair in a sorted sequence. The write are all sequential IO. Fast on any storage media.

The new SSTable becomes the most recent segment of the LSM tree as more data comes in more and more of this immutable SSTable are created and added to the LSM tree. Each one representing a small chronological subset of the incoming changes.

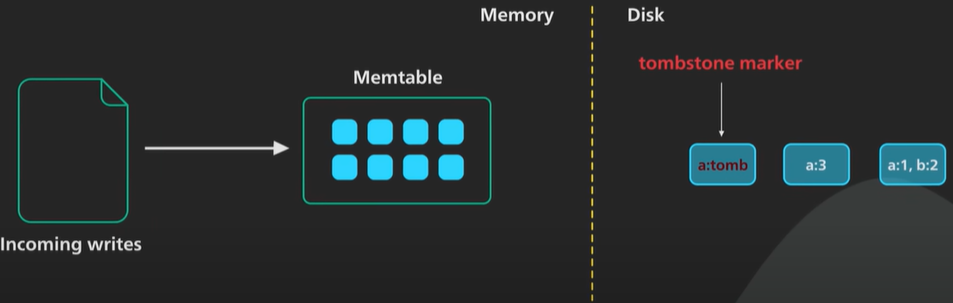

Since SSTable is immutable and update to an existing object key does not overwrite an existing SSTable.

Instead a new entry is added to the most recent SSTable which supersede any entries in the old access table for the objective.

Deleting an object in SSTable we cannot mark anything in the sustainable as deleted. To perform a delete it adds a marker called a tombstone to the most recent SSTable for the object key.

When we get the tombstone on read we known that the object has been deleted. It is a bit unintuitive that a delete takes up extra space.

To serve a read request we first try to find the key in the memtable then in the most recent SSTable in the LSM tree then the next SSTable.

Since the sustainability sorted the lookup can be done efficiently.

The number of SSTable grows it would take an increasingly long time to look up a key. As the SSTable accumulate the more outdated entries as keys are updated and tombstones are added. It takes disk space.

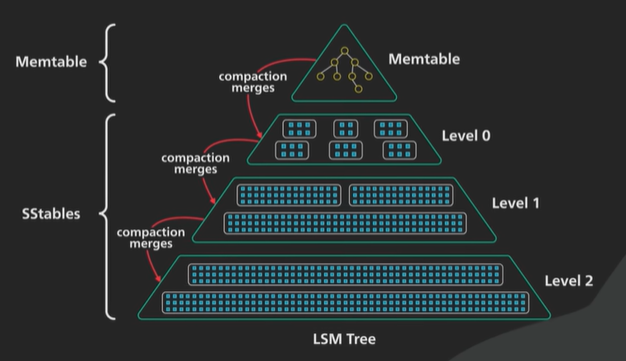

To fix the issues there is a periodic merging and compaction process running in the background to merge SSTable and discard outdated or deleted values.

SSTable is sorted so the merging and compaction process is simple and uses mergesort algorithm.

When SSTable are merged they are organized into labels. There are different strategies to determine where and how the SSTable are merged and compacted.

There are two board strategies Size tiered compaction and level compaction.

Size tiered compaction - Cassandra is optimized for write throughput.

Level compaction - Rock DB is more read-optimized.

Complexion keeps the SSTable manageable. This is similar organised in levels.

Each table gets larger as SSTable from the level above are merged into it.

Compaction contains a lot of IO.

A mistuned compaction could starve the system and slow down both read and write.

Optimization for the LSM tree.

There are many optimizations which tries to perform the read operations similar to the B Tree.

It look up on every label of the SSTable. searching is easy as it is done on the sorted data but then going through all of the data takes a lot of IO.

Many system contains a summary table in each level which contains the min max range of each disc block of every level.

Another issue to look up a key that does not exists. It looks all the eligible bocks in all the levels. Most system keeps a bloom filter at each level.

A bloom filter is a space efficient data structure that returns a firm no if the key does not exist. It help the system not to look at each level and it improves the IO system.

Bloom Filters.

Bloom Filter is a space efficient probabilistic data structure.

It would answer with a firm no and probably yes.

Use case can tolerate some false positives but not any false negatives a bloom filter could be very useful.

We cannot removes an item from a bloom filter it never forgets.

NoSql databases use bloom filter to reduce disk read for keys that don’t exist. An lsm tree based database searching for a key that doesn’t exist requires looking through many files and it’s very costly.

CDN like Akamai use bloom filter to prevent caching one-hit-wonders these are web pages that are only requested once.

Akamai data 75% of the pages at one-day-wonders using a bloom filter to track all the urls seen and only caching a page on the second request is significantly reduces the caching workload and increases the caching hit rate.

Web browsers like chrome used to use a bloom filter to identify malicious url. Urls was first checked against a bloom filter. It only performed a more expensive full cheque of the url if the bloom filter returned a probably yes answer. This is no longer used however as the number of malicious urls grows to the millions and a more efficient but complicated solution is needed.

Some password validators use bloom filter to prevent users from using weak passwords sometimes it’s wrong password will be a victim or a false positive.

The critical ingredient to a good bloom filter is some good hash functions these hash functions should be fast and they should produce outputs that evenly at randomly distributed, few collisions can be there.

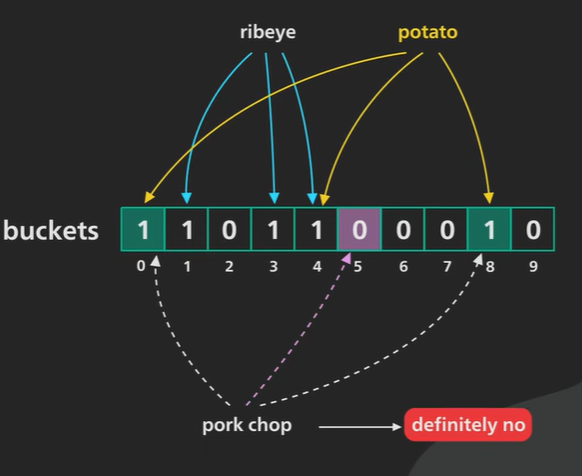

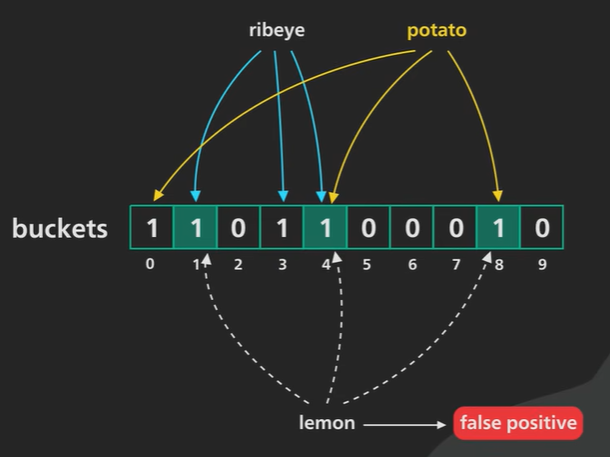

A bloom filter is a large set of buckets where each bucket containing a single bit and they all start with zero.

Example to keep track of the food liked for this example we’ll use a bloom filter with 10 buckets (0-9) and we’ll use three hash functions.

Putting ribeye into the bloom filter the three hash functions return the numbers 1 3 4 these were set the buckets to one.

Potato into the boom filter the hashing function returned to number 0, 4, 8 this time set the value to 1.

Ask bloom filter about ribeye since the same input always hashes to the same output ribeye still hashes to the number 1, 3, 4 bloom filter array value 1. Correct.

Asking about chop the value of the hash say 1, 5, 8 and the value 5 is not 1 in the array so the answer is no and it is correct.

Asking lemon and the hash return 1, 4, 8 and the value are 1 in array and then search happen and lemon not present. It say yes but the value not present. It is a false positive case.

The hash function should be good and the size of the array should be proper to avoid less false positive.

Back Of Envelope Estimation.

https://youtu.be/UC5xf8FbdJc?si=QUtv6DCN5ACTBzoE

Back-of-the-envelope math is a very useful tool in our system design toolbox. In this video, we will go over how and when to use it, and share some tips on using it effectively. Let’s dive right in. Experienced developers use back-of-the-envelope math to quickly sanity-check a design. In these cases, absolute accuracy is not that important. Usually, it is good enough to get within an order of magnitude or two of the actual numbers we are looking for. For example, if the math says at our scale our web service needs to handle 1M requests per second, and each web server could only handle about 10K requests per second, we learn two things quickly: One, we learn that we will need to cluster of web servers, with a load balancer in front of them. Two, we will need about 100 web servers. Another example is if the math shows that the database needs to handle about 10 queries per second at peak, it means that a single database server could handle the load for a while, and there is no need to consider sharding or caching for a while. Now let’s go over some of the most popular numbers to estimate. The most useful by far is requests per second at the service level or queries per second at the database level. Let’s go over the common inputs in a requests-per-second calculation. The first input is DAU, or Daily Active Users. This number should be easy to obtain. Sometimes, the only available number would be Monthly Active Users. In that case, estimate the DAU as a percentage of MAU. The second input is the estimate of the usage per DAU of the service we are designing for. For example, not everyone active on Twitter makes a post. Only a percentage does that. 10%-25% seems to be reasonable. Again, it doesn’t have to be exact. Getting within an order of magnitude is usually fine. The third input is a scaling factor. The usage rate for a service usually has peaks and valleys throughout the day. We need to estimate how much higher the traffic would peak compared to the average. This would reflect the estimated requests-per-second peak where the design could potentially break. For example, for a service like Google Maps, the usage rate during commute hours could be 5 times higher than average. Another example is a ride-sharing service like Uber, where weekend nights could have twice as many rides as average. Now, let’s go over an example. We will estimate the number of Tweets created per second on Twitter. Note that these numbers are made up, and they are not official numbers from Twitter. Let’s assume Twitter has 300 million MAU, and 50% of the MAU use Twitter daily. So that’s 150 million DAU. Next, we estimate that about 25% of Twitter DAU make tweets. And each one on average makes 2 tweets. That is 25% * 2 = 0.5 tweets per DAU. For the scaling factor, we estimate that most people tweet in the morning when they get up and can’t wait to share what they dreamed about the night before. And that spikes the tweet creation traffic to twice the average when the US east coast wakes up. Now we have enough to calculate the peak tweets created per second. We have: 150 million DAU times 0.5 tweet per DAU, times 2x scaling factor divided by 86,400 seconds in a day. That is roughly about 1,500 tweets created per second. Let’s go over the techniques to simplify the calculations. First, we convert all big numbers to scientific notation. Doing the math on really big numbers is very error-prone. By converting big numbers to scientific notation, part of the multiplication becomes simple addition, and division becomes subtraction. In the example above, 150 million DAU becomes 150 times 10 to the sixth or 1.5 times 10 to the eighth. There are 86,400 seconds in a day, we round it up to 100,000 seconds, and that becomes 10 to the fifth seconds. And since it’s a division, 10 to the fifth 5 becomes 10 to the minus fifth. Next, we group all the power of tens together, and then all the other numbers together. So the math becomes: 1.5 times 0.5 times 2 And 10^8 * 10 ^(-5) = 10^(8-5) = 10^3 Putting it all together, it’s 1.5x10^3, or 1,500. Now with practice, we should be able to convert a large number to scientific notation in seconds. And here are some handy conversions we should memorize: As an example, we should know by heart that 10^12 is a trillion or a TB, and when we see a number like 50TB, we should be able to convert it quickly to 5x10^1x10^12, which is 5x10^13. We are going to ignore the fact that 1KB is actually 2^10 bytes, or 1,024 bytes, and not a thousand bytes. We don’t need that degree of accuracy for back-of-the-envelope math. Let’s wrap up by going through one last example. We’ll estimate how much storage is required for storing multimedia files for tweets. We know from the previous example that there are about 150M tweets per day. Now we need an estimate on a percentage of tweets that could contain multimedia content, and how large those files are on average. With our meticulous research, we estimate that 10% of tweets contain pictures, and they are about 100KB each, and 1% of all the tweets contain videos, and they are 100MB each. We further assume that the files are replicated, with 3 copies each, and that Twitter would keep the media for 5 years. Now here is the math. For storing pictures, we have the following: 150M tweets x 0.1 in pictures x 100KB per picture x 400 days in a year x 5 years * 3 copies So, that turns into: 1.510^8 x 10^(-1) x 10^5 x 4x10^2 x 5 x 3 Again, we group the powers of tens together. This becomes: 1.5 times 4 times 5 times 3, which is 90 and 10 to the (8-1+5+2), which is 10^14 And that becomes 9x10^15, which is, from the table, 9 PB. For storing videos, we take yet another shortcut. Since videos on average are 100MB each while pictures are 100KB, a video is 1000 times bigger than a picture on average. Second, only 1% of tweets contain a video, while pictures appear in 10% of all the tweets. So videos are one-tenth as popular. Putting the math together, the total video storage is 1000 x 1/10 of picture storage, which is 100 x 9PB, or 900 PB. In conclusion, back-of-the-envelope math is a very useful tool in our system design toolbox. Don’t over-index on precision. Getting within an order of magnitude is usually enough to inform and validate our design. If you would like to learn more about system design, check out our books and weekly newsletter. Please subscribe if you learned something new. Thank you so much, and we’ll see you next time.

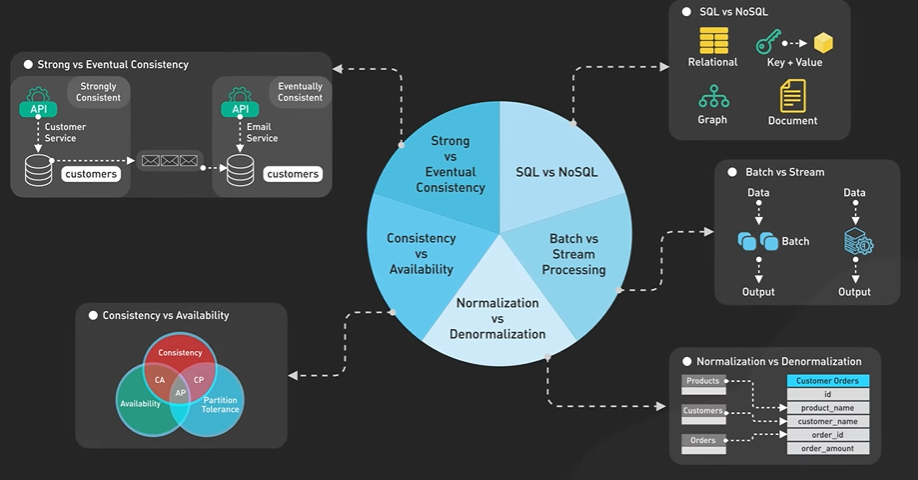

Choose right DB.

How does live streaming platform work.

https://youtu.be/7AMRfNKwuYo?si=cOzaxorGjuqwkeWd

How do these popular live streaming platforms deliver video content from the streamer’s computer to the viewer’s device, or the so-called “glass-to-glass” latency, that is measured in low tens of seconds or faster? Let’s take a look. Live streaming is challenging because the video content is sent over the internet in near real-time. Video processing is compute-intensive. Sending a large volume of video contents over the internet takes time. These factors make live streaming challenging. Let’s take a look at how a video stream goes from the streamer to the viewers. First, the streamer starts their stream. The source could be any video and audio source wired up to an encoder, something like the popular open-source OBS software. Some popular platforms like YouTube provide easy-to-use software to stream from a browser with a webcam, or directly from a mobile phone camera. The job of the encoder is to package the video stream and send it in a transport protocol that the live streaming platform can receive for further processing. The most popular transport protocol is called RTMP, or Real-time Messaging Protocol. RTMP is a TCP-based protocol. It started out a long time ago as the video streaming protocol for Adobe Flash. The encoders can all speak RTMP, or its secure variant called RTMPS. There is a new protocol called SRT, or Secure Reliable Transport, that could start to replace RTMP. SRT is UDP-based and it promises lower latency and better resilience to poor network conditions. However, most of the popular streaming platforms do not yet support SRT. To provide the best upload condition for the streamer, most live streaming platforms provide point-of-presence servers worldwide. The streamer connects to a point-of-presence server closest to them. This usually happens automatically with either DNS latency based routing or an anycast network. Once the stream reaches the point-of-presence server, it is transmitted over a fast and reliable backbone network to the platform for further processing. At the platform, the main goal of this additional processing is to offer the video stream in different qualities and bit-rates. Modem video players automatically choose the best video resolution and bit rate based on the quality of the viewer’s internet connection and can adjust on the fly by requesting different bit rates as the network condition changes. This is called adaptive bitrate streaming. The exact processing steps vary by platform and the output streaming formats. In general, they fall into the following categories. First, the incoming video stream is transcoded to different resolutions and bit-rates - basically different quality levels for the video. The transcoded stream is divided into smaller video segments a few seconds in length. This process is called segmentation. Transcoding is compute-intensive. The input stream is usually transcoded to different formats in parallel, requiring massive compute power. Next, the collections of video segments from the transcoding process are packaged into different live streaming formats that video players can understand. The most common live-streaming format is HLS, or HTTP Live Streaming. HLS was invented by Apple in 2009. It is the most popular streaming format to date. An HLS stream consists of a manifest file, and a series of video chunks, with each chunk containing a video segment as short as a few seconds. The manifest file is a directory to tell the video player what all the output formats are, and where to load the video chunks over HTTP. The resulting HLS manifest and video chunks from the packaging step are cached by the CDN. This reduces the so-called last-mile latency to the viewers. DASH, or Dynamic Adaptive Streaming over HTTP, is another popular streaming format. Apple devices natively do not support DASH. Finally, the video starts to arrive at the viewer’s video player. The “glass-to-glass” latency of around 20 seconds is normal. There are several factors a streamer or the live streaming platform could tune to improve this latency by sacrificing various aspects of the overall video quality. Some platforms simplify this tuning process by providing a coarse knob for the streamer to choose the level of interactivity they desire. The platforms then adjust the quality of the stream based on that input. This is largely a blackbox from the streamer’s perspective. The best thing the streamer could do is optimize their local setup for the lowest latency from the camera to the streaming platform.

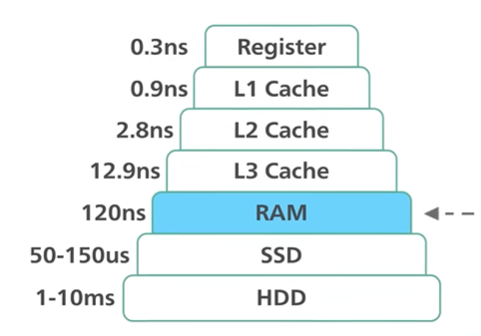

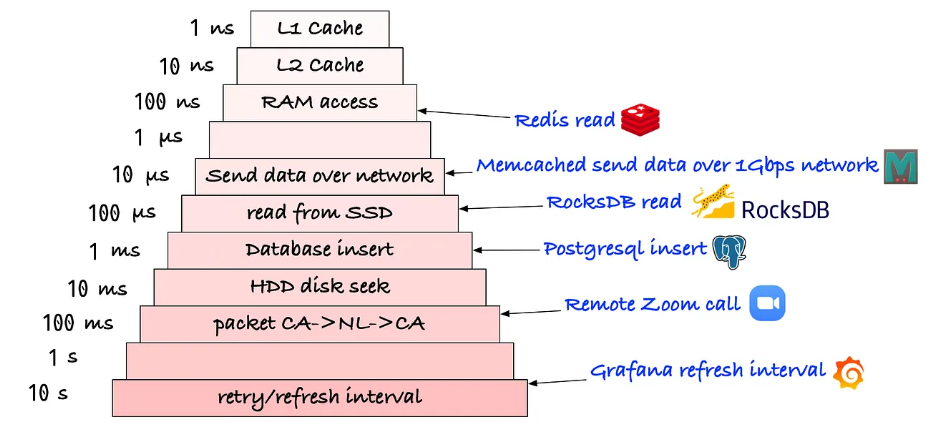

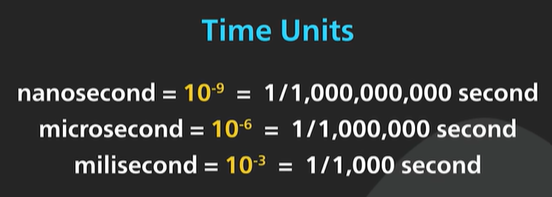

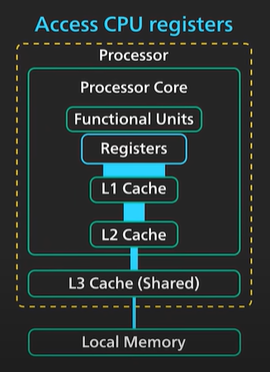

Latency Numbers.

Disk access latency has improved HHD to SSD.

The network latency between countries has not improved as it covers the country and obeys the law of physics.

1 ns - Accessing CPU Register is sub-nanosecond range.

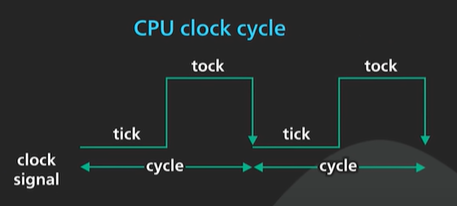

A clock cycle of a modern CPU is in sub-nanosecond range.

1-10 ns - L1 and L2 cache access. Some expensive CPU operations are in the range. Example like Branch Mispredict penalty can be 20 CPU clock cycle and it is in the range.

10-100 ns - L3 cache access is in the fast side of the range. For modern processor like Apple M1 referencing main memory is the slow end at the range.

Main memory access in modern CPU is 100 times slower than CPU register access.

100-1000 ns or 1 μs microsecond - System call. In linux making a simple system call takes several hundred nanoseconds. It is a direct call to trap into the kernel and back. It does not account the cost of executing the system calls.

It takes 200 ns to MD5 hash a 64 bit number.

1-10 μs - It is 1000 times slower than a CPU register access. Context switching in Linus thread takes few micro seconds.

10-100 μs - A network proxy like Nginx would take around 50 microsecond to process a typical http request. Reading 1 MB of data sequentially from a main memory takes around 50 μs. The read latency of SSD takes 100 μs to read 8k page.

100-1000 μs or 1 milliseconds - The SSD write latency is 10 times slower than read latency. Intrazone Network Round trip for modern cloud providers takes few hundred microseconds. A typical name cache already cache operation takes around 1 millisecond.

1-10 ms - Inter zone network round trip of clous takes this range. The seek time of the hard disk drive is about 5 ms.

10-100 ms - The network round trip between US East to US West Coast or the US East Coast and Europe is in this range. Reading of 1 GB sequential from main memory.

100-1000ms - The hash function becrypt is used to encrypt a password and it takes around 300 milliseconds. TLS handshake is in 250-500 ms range. It has several machine round trip so the number depends on the distance between the machines. The network round trip between the US West Coast and Singapore is in this range. Reading 1GB sequentially from SSD is also in this range.

1 s - Transferring 1GB over the network within the same cloud region takes about 10s.

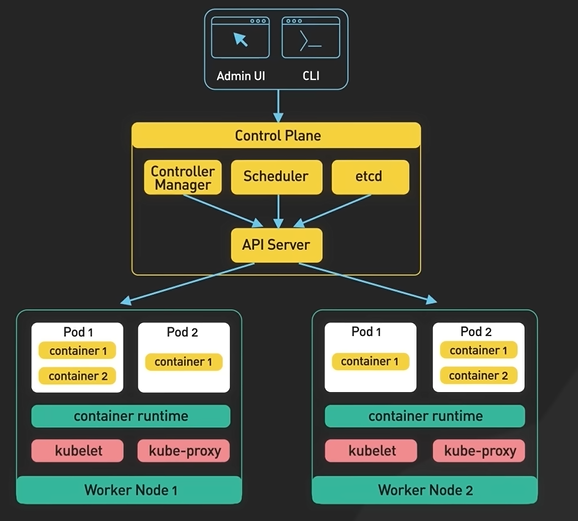

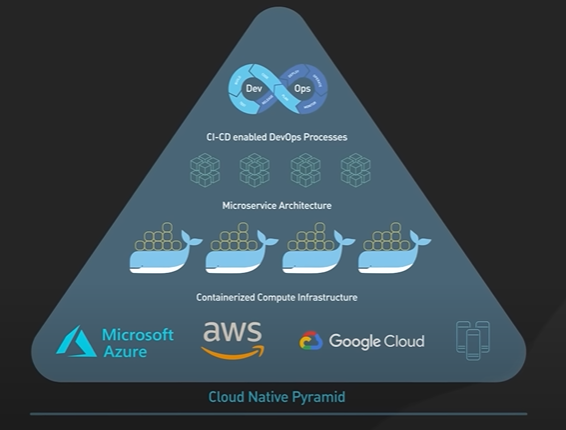

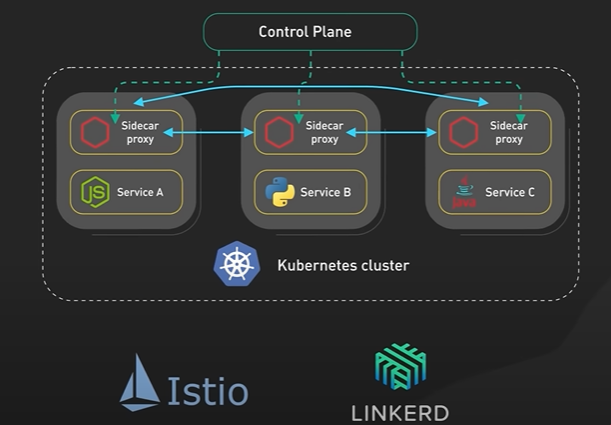

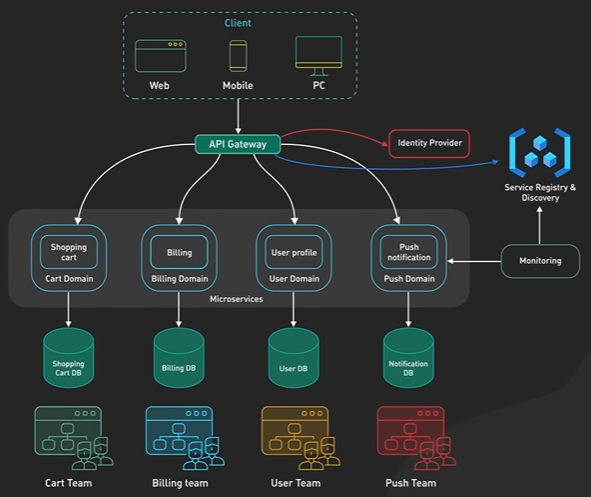

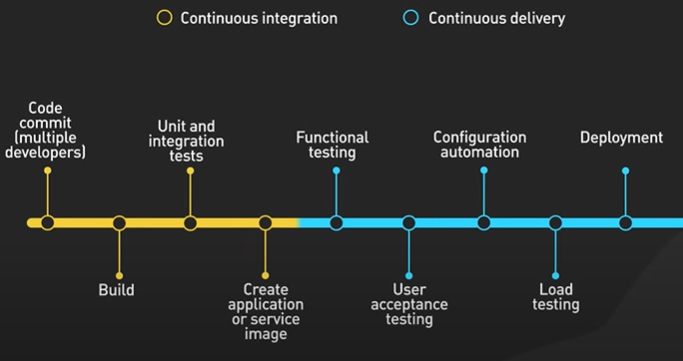

What are Microservice.

https://youtu.be/lTAcCNbJ7KE?si=jMeNl8_zHMZxGxOV

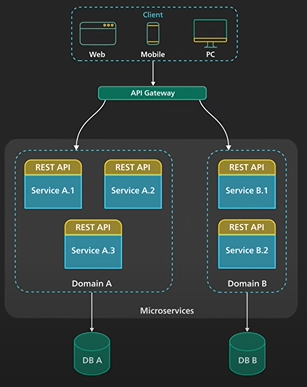

Microservices architecture enables large teams to build scalable applications that are composed of many loosely coupled services. What does a typical microservices architecture look like? And when should we use it? Let’s take a look. Microservices are loosely coupled. Each service handles a dedicated function inside a large-scale application. For example, shopping cart, billing, user profile, push notifications can all be individual microservices. These functional areas are sometimes called domains. Microservices communicate with each other via well-defined interfaces with small surface areas. The small surface areas limit the blast radius of failures and defects. It makes each service easier to reason about in the context of the entire application. Microservices talk to one another over a combination of remote procedure calls (RPC), event streaming, or message brokers. RPC like gRPC provides faster response, but the blast radius, or the impact to other microservices, would be larger when the service was to go down. Event streaming provides better isolation between services but they take longer to process. Microservices can be independently deployed. Since each service is small, easier to reason about, and has a smaller blast radius, this gives the operators peace of mind and confidence to deploy often. Microservices provide more flexibility to scale up individual microservices independently. The operational flexibility is invaluable. Well-architected microservices practice strong information hiding. This often means breaking up a monolithic database into its logical components and keeping each logical component well hidden inside its corresponding microservice. By logical component, it could mean a separate schema within a database cluster or an entirely separate physical database. This is an implementation detail. However, one big drawback of microservices is the breaking up of the database. By breaking up a database into separate logical units, the database can no longer maintain foreign key relationships and enforce referential integrity between these units. The burden of maintaining data integrity is now moved into the application layer. Let’s take a look at other critical components required for a successful implementation of microservices architecture. A key component is an API gateway. API gateway handles incoming requests and routes them to the relevant microservices. The API gateway relies on an identity provider service to handle the authentication and put authorization of each request coming through the API gateway. To locate the service to route an incoming request to, the API gateway consults a service registry and discovery service. Microservices register with this service registry and discover the location of other microservices through the discovery service. There are other useful components in a microservices architecture like monitoring and alerting, DevOps toolings for deployment, and troubleshooting, for example. Let’s wrap up by discussing when to use microservices architecture. Microservices cost money to build and operate. It really only makes sense for large teams. For large teams, it enables team independence. Each domain, or function, can be independently maintained by a dedicated team. In a well-designed microservices architecture, these independent teams can move fast, and the blast radius of failures is well-contained. Each service could be independently designed, deployed, and scaled. However, the overhead of a sound implementation is so large that it is usually not a good fit for small startups. One advice for startups is to design each function in the application with a well-defined interface. One day if the business and team are growing fast that microservices architecture starts to make sense, it would be more manageable to migrate. If you would like to learn more about system design, check out our books and weekly newsletter. Please subscribe if you learned something new. Thank you so much, and we’ll see you next time.

How Apple pay works.

https://youtu.be/cHv8LqkbPHk?si=dsTujVI2Ct1W28Nz

Which one is better? Let’s take a look. We’ll start by stating that both implementations are secure, but the technical details are a bit different. Apple Pay started in 2013. It was a novel idea then. It perfected the concept called tokenization where only a payment token representing the sensitive credit card info is needed to complete a purchase. It required close cooperation from payment networks like VISA and large issuing banks like JPMC to build a new system to support the wide adoption of payment tokens. Google Pay has been around since 2018. Before that, it was known as Android Pay, and before that, Google Wallet. The branding is confusing, and it appears that now Google Pay is Google Wallet again in some countries. We will refer to the current version that uses payment tokenization as Google Pay. Let’s take a look at how these systems work. Both platforms start by collecting sensitive credit card information on the device. It is worth noting that both platforms claim that they don’t store the PAN, or Primary Account Number, on the device itself. The PAN is then sent to the respective servers over HTTPS. At the Apple server, the credit card info is not stored anywhere. It is used to identify the payment network and the issuing bank for the credit card. To simplify, we will refer to the payment network like VISA and the bank that issues the credit card as the bank from here on out. The credit card info is then sent to the bank securely over the network. The bank validates the PAN and returns a token called DAN, or device account number. This number is uniquely generated for use only by the device. The generation of the DAN and the mapping of the DAN to the PAN is usually offloaded to a TSP, or a Token Service Provider. The TSP is where the most sensitive information lives. The DAN is then returned to the Apple server, and the server forwards the DAN to the iPhone where it is stored securely in a special hardware chip called Secure Element. Logically, the Apple server is just a pass-through. It is as if the iPhone directly sends the credit card info to the bank in exchange for the DAN. For Google Pay, this process is a bit different. Like Apple, the PAN is sent securely by the device to the Google server. The Google server uses the PAN to identify the bank. The PAN is then sent to the bank securely over the network. The bank validates the PAN and forwards it to the TSP for a payment token. The token is sometimes called DPAN, or Device PAN. The token is then sent back to the Google server, where it is forwarded to the device for safe storage. There are two points worth mentioning here that are different than Apple. One - The token is not stored in the Secure Element like on the Apple devices. It is stored in the wallet app itself. Two - Apple boasts to never store the payment tokens on its server. Google makes no such claim, and in fact, in its term of service, it states that payment information is stored on its server. We explained so far how each implementation turns the card info into some form of on-device payment token. Let’s take a look at how these tokens are used to make a purchase. For the iPhone, once we click Pay, the DAN is retrieved from the Secure Element and sent to the merchant’s Point-of-sale terminal over NFC, or Near Field Communication. This is really secure, because the DAN is sent directly from the Secure Element to the NFC controller on the device. The NFC controller and the Secure Element communicate with the point-of-sale terminal. The point-of-sale terminal sends the DAN to the merchant’s bank. The merchant’s bank identifies the payment network from the DAN and securely routes the DAN to the payment network. The payment network validates the DAN, then makes a request to the TSP to de-tokenize the DAN back to the original PAN. The PAN is sent securely from the TSP to the bank where the card was issued where the payment is authorized. For Google Pay, the flow is similar once the payment token reaches the point-of-sale terminal. How it gets from the device to the point-of-sale terminal is quite different. Android devices do not store the payment token in the Secure Element. It instead uses something called Host Card Emulation, or HCE. With HCE, the payment token is stored in the wallet app or retrieved at transaction time by the wallet app securely from the cloud. The NFC controller and the wallet app work together to transmit the payment token over NFC to the point-of-sale terminal. At that point, the rest of the transaction is the same as Apple’s. To conclude, both Apple Pay and Google Pay take advantage of the payment tokenization technology. Apple Pay perfected the technology and made it user friendly and secure. Google Pay’s implementation is similar. The main difference is how the payment token is stored, handled, and transmitted on device, and how the payment token could potentially be stored on the Google server. If you like our videos, you might like our weekly system design newsletter as well.

Proxy vs Reverse Proxy.

Nginx is called a reverse proxy.

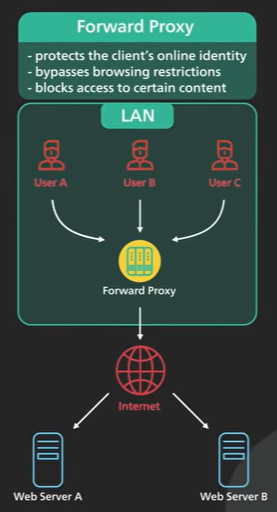

There are 2 common types of proxy a forward proxy and reverse proxy.

Forward proxy is a server and sits between a group of client machines and Internet. When those clients make request to websites on the Internet the forward proxy acts as a middleman intercepts those requests and talks to the web servers on behalf of those client machines.

Why do we do that?

There are a few reasons - Forward proxy protects the clients online identity, the ip address of the client is hidden from the server only the ip address of the proxy is visible it would be harder to trace back to the client.

Forward proxy can be used to bypass browsing restrictions. Some institutions the governments, schools and big businesses use firewalls to restrict access to the Internet by connecting to a forward proxy outside the firewalls the client machine can potentially get around these restrictions.

Forward proxy can be used to block access to certain content. It is not uncommon for schools and businesses to configure the networks to connect all clients to the web through the proxy and apply filtering rules to disallow sites by social networks.

Forward proxy normally requires a client to configure its application to point to it.

Large institutions they usually apply a technique called transparent proxies to streamline the process.

Transparent proxy works with layer 4 switches to redirect certain types of traffic to the proxy automatically there is no need to configure a client machines to use it.

It is difficult to bypass a transparent proxy when the client is on the institution’s network.

Reverse proxy sits between the Internet and the Web servers. It intercepts the reuqets from clients and talk to the server on behalf of the client.

Why would a website use a reverse proxy.

There are few good reasons.

A reverse proxy could be used to protect a website the website’s ip addresses a hidden behind the reverse proxy they’re not revealed to the clients this makes it much harder to target a ddos attack against a website.

A reverse proxy is used for load balancing a popular website handling millions of users every day is unlikely to be able to handle the traffic with a single server a reverse proxy can balance a large amount of incoming request by distributing the traffic to a large pool of web servers and effectively preventing any single one of them from becoming overloaded.

This assumes that the reverse proxy can handle the incoming traffic. Services like Cloudflare put reverse proxy servers in hundreds of locations all around the world this puts a reverse proxy closer to users and at the same time provides a large amount of processing capacity.

A reverse proxy caches static content a piece of content could be cached on the reverse proxy for a period of time if the same piece of content is requested again from the reverse proxy the locally cached version could be quickly.

The reverse proxy can handle ssl encryption ssl handshake is computationally expensive the reverse proxy can free up the origin servers from those expensive operations instead of handling ssl for all clients a website only needs to handle ssl handshake from a small number of reverse proxies.

Layers of Reverse Proc.

The first layer could be an edge service like Cloudflare. The reverse proxies are deployed to hundreds of locations worldwide closer to users.

The second layer could be an api gateway or load balancer at the hosting provider many cloud providers combine these two layers into a single ingress service.

The user will enter the cloud network at the edge closer to the user and from the edge the reverse proxy connects over a fast fibre network to the load balancer where the request is even a distributed over a cluster of web servers.

What is API Gateway.

https://youtu.be/6ULyxuHKxg8?si=H9Y7IPTtUgg3oKy9

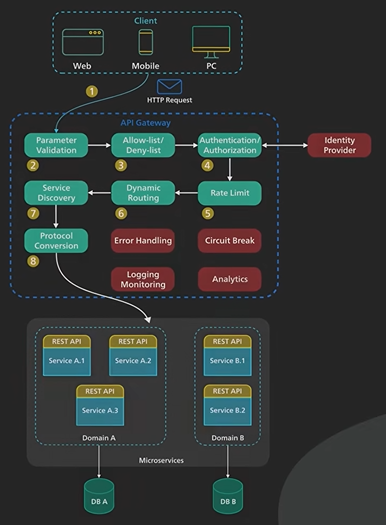

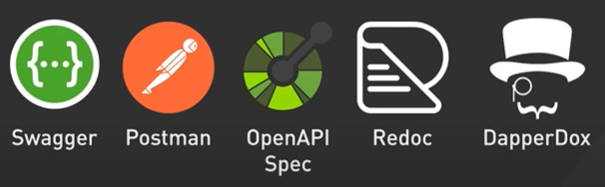

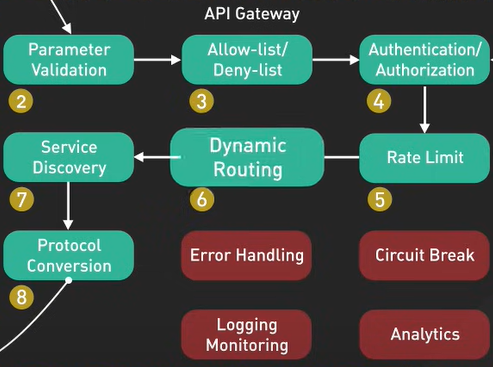

API Gateway uses - Authentication and security policy enforcement, load balancing and circuit breaking, protocol translation and service discovery, monitoring, logging, analytics and billing, caching.

What does an API gateway do? Why do we need it? Let’s take a look. An API gateway is a single point of entry to the clients of an application. It sits between the clients and a collection of backend services for the application. An API gateway typically provides several important functions. Some common ones are: authentication and security policy enforcements, load balancing and circuit breaking, protocol translation and service discovery, monitoring, logging, analytics, and billing. And finally, caching. Let’s examine a typical flow of a client request through the API gateway and onto the backend service. Step 1 - the client sends a request to the API gateway. The request is typically HTTP-based. It could be REST, GraphQL, or some other higher-level abstractions. Step 2 - the API gateway validates the HTTP request. Step 3 - the API gateway checks the caller’s IP address and other HTTP headers against its allow-list and deny-list. It could also perform basic rate limit checks against attributes such as IP address and HTTP headers. For example, it could reject requests from an IP address exceeding a certain rate. Step 4 - the API gateway passes the request to an identity provider for authentication and authorization. This in itself is a complicated topic. The API gateway receives an authenticated session back from the provider with the scope of what the request is allowed to do. Step 5 - a higher level rate-limit check is applied against the authenticated session. If it is over the limit, the request is rejected at this point. Step 6 and 7 - With the help of a service discovery component, the API gateway locates the appropriate backend service to handle the request by path matching. Step 8 - the API gateway transforms the request into the appropriate protocol and sends the transformed request to the backend service. An example would be gRPC. When the response comes back from the backend service, the API gateway transforms the response back to the public-facing protocol and returns the response to the client. A proper API gateway also provides other critical services. For example, an API gateway should track errors and provide circuit-breaking functionality to protect the services from overloading. An API gateway should also provide logging, monitoring, and analytics services for operational observability. An API gateway is a critical piece of the infrastructure. It should be deployed to multiple regions to improve availability. For many cloud provider offerings, the API gateway is deployed across the world close to the clients.

What is GraphQL. REST vs GraphQL.

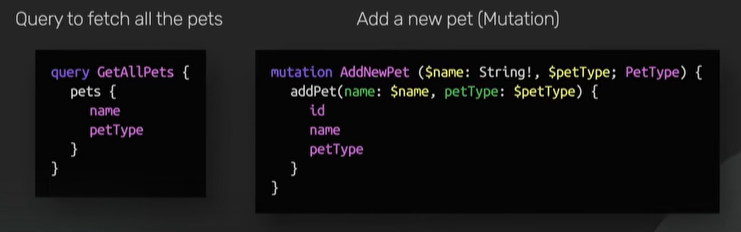

GraphQL is a query language for API developer by Meta.

It provides a scheme of the data in the api and user can ask what they need.

GraphQL sits between the clients and the backend services. It could aggregate multiple resource requests into a single query. It also supports mutations and subscriptions.

Mutations are GraphQL way of applying data modifications to resources.

Subscriptions are GraphQL way for clients to receive notification on data modifications.

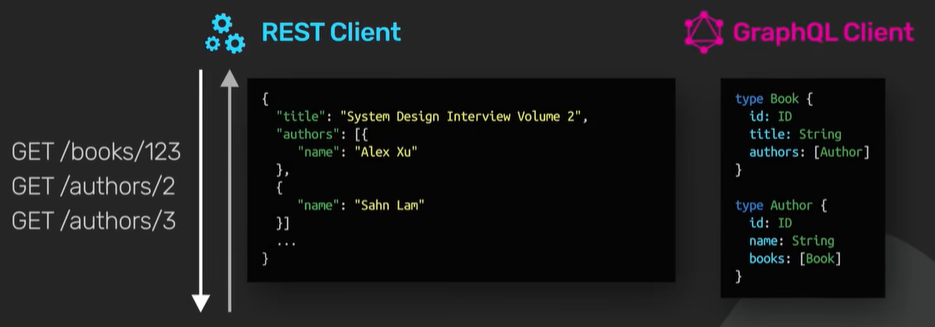

GraphQL and REST both send HTTP request and receive HTTP response.

REST centers around resources each resource is identified by a url. It uses v3/books/123 to fetch a book from the bookstore api.

The authors field is implementation specific some rest api implementations by break them into separate REST call like /authors/3 or authors/5

In GraphQL it looks different we first define the types in this example we have the book and author types.

The types describe the kinds of data available. They don’t specify how the data is retrieved via GraphQL.

To do that we need to define a query type Query{ book(id: ID!): Book }

Now we can send a request to the graph ql endpoints to fetch the same data as we can see rest in graph ql both use http both make a request via a url and both can return adjacent response in the same shape.

REST - GET /books/123 and GraphQL - GET /graphql?query={book(id="123"){title,authors{name}}}

Specify the exact resource book and the field like the title and authors.

In REST the api decided author will be inside a resource. In GraphQL user decide what to include in the response.

GraphQL does not use the URL and it uses the schema.

Rest the work in the client side and say first get the books then the author details and it is a N+1 problem.

Drawback - REST does not need any libraries to consume someone else API. Request can be send using common tools like curl or browser. GraphQL requires heavier tooling support both on the client and server size.

GraphQL + Apollo - schema.geaphql, codegen.yaml, operation.graphql

It is more difficult to cache REST users http get for fetching resources and http get has a well defined caching behaviour that is leveraged by browsers, cdns, proxies and web servers. GraphQL has a single point of entry and uses http post by default this prevents the full use of http caching. With care GraphQL could be configured to better leverage http caching the detail is very nuanced.

While GraphQL allows client to query for just the data they need this also poses a great danger. Example where mobile applications shipped a new feature that causes an unexpected table scan of a critical database table of a backend service. This could bring the database down as soon as the new application goes live. It can be solved but it is complex. It should be monitored before choosing GraphQL.

What is Single Sign On.

SSO is an authentication scheme enables a user to securely access multiple applications and services using a single id.

Integrated into apps like Gmail, Workday or slack it provides a pop up widget or lock in page for the same set of credentials with SSO user can access many apps without having a lock in each time.

SSO is built on a concept called Federated identity.

It enables sharing of identity information across trusted but independent systems.

There are two common protocols for this authentication process - SAML and OpenID.

SAML or Security Assertion Markup Language is an xml based open standard for exchanging identity information between services. It is company found in the work environment.

OpenID connect we use when sign in to Google a box appear. It uses JWT to share identity information between services.

How SSO works.

Using protocol SAML, An office worker visits an application like Gmail in SAML terms Gmail in this example is a service provider.

The Gmail Server detects that the user is from the work domain and returns a SAML authentication request back to the browser.

The browser redirects the user to the identity provider for the company specifying the authentication request okta all zero and one logging are subcommon examples of commercial identity providers the identity provider shows the market page where the user enters the log in the passions once the usage is authenticated the identity provided generates a sample response and returns that to the browser this is called a sample assertion the sample assertion is a cryptographically signed xml document that contains information about the user and what the user can access with the service provider the browser forwards the science and constitution to the service provider now the service provider validate that the assertion was signed by the identity provider this validation is usually done with public key cryptography the service provider returns the protective resource to the browser based on what the user is allowed to access as specified in the sample assertion.

SSO integrated application say work day to work day server as in the previous example with Gmail it has to work domain and sends a sample authentication request back to the browser now the browser again we direct the user to the identity provider the user has already logged in with the identity provider it skips the lock in process and instead generates a sample assertion for work day detailing what the users can access there the sample assertion is returned to the browser and forwarded to Woody woody validates the sign assertion and grants user access accordingly the earlier we mentioned that open ID connect is another common protocol the open ID connect flow is similar to Sam but instead of passing sign excel documents around open id connect passes jwt jwt is assigned Json Docket the implementation details are a little bit different but the overall concept is to say so which one of these ssl methods should we use both implementation are secure for an enterprise environment where it is common to outsource identity management to a commercial identity platform the good news is that many of these platforms provide strong support for both so the decision that depends on the application being integrated and which protocol is easier to integrate with if we are writing a new web application integrating with some of the more popular open id connect platforms like Google Facebook we’ll get them is probably a safe bet.

What is a CDN.

https://youtu.be/RI9np1LWzqw?si=usMw70pdgM0y3mSH

Why should we developers all take advantage of it? Let’s take a look. CDN, or content delivery network, has been around since the late 90s. It was originally developed to speed up the delivery of static HTML content for users all around the world. CDN has evolved over the ensuing decades. Nowadays, a CDN should be used whenever HTTP traffic is served. What can a modern CDN do for us? Let’s take a closer look. At a fundamental level, a CDN brings content closer to the user. This improves the performance of a web service as perceived by the user. It is well-documented that performance is critical to user engagement and retention. To bring service closer to the users, CDN deploys servers at hundreds of locations all over the world. These server locations are called Point of Presence, or PoPs. A server inside the PoP is now commonly called an edge server. Having many PoPs all over the world ensures that every user can reach a fast-edge server close to them. Different CDNs use different technologies to direct a user’s request to the closest PoP. Two common ones are DNS-based routing and Anycast. With DNS-based routing, each PoP has its own IP address. When the user looks up the IP address for the CDN, DNS returns the IP address of the PoP closest to them. With Anycast, all PoPs share the same IP address. When a request comes into the Anycast network for that IP address, the network sends the request to the PoP that is closest to the requester. Each edge server acts as a reverse proxy with a huge content cache. We talked about reverse proxy in an earlier video. Check out the description if you would like to learn more. Static contents are cached on the edge server in this content cache. If a piece of content is in the cache, it could be quickly returned to the user. Since the edge server only asks for a copy of the static content from the origin server if it is not in its cache, this greatly reduces the load and bandwidth requirements of the origin server cluster. A modern CDN could also transform static content into more optimized formats. For example, it could minify Javascript bundles on the fly, or transform an image file from an old format to a modern one like WebP or AVIF. The edge server also serves a very important role in the modern HTTP stack. All TLS connections terminate at the edge server. TLS handshakes are expensive. The commonly used TLS versions like TLS 1.2 take several network round trips to establish. By terminating the TLS connection at the edge, it significantly reduces the latency for the user to establish an encrypted TCP connection. This is one reason why many modern applications send even dynamic uncacheable HTTP content over the CDN. Besides performance, a modern CDN brings two other major benefits. First is security. All modern CDNs have huge network capacity at the edge. This is the key to providing effective DDoS protection against large-scale attacks - by having a network with a capacity much larger than the attackers. This is especially effective with a CDN built on an Anycast network. It allows the CDN to diffuse the attack traffic over a huge number of servers. Second, a modern CDN improves availability. A CDN by its very nature is highly distributed. By having copies of contents available in many PoPs, a CDN can withstand many more hardware failures than the origin servers. A modern CDN provides many benefits. If we are serving HTTP traffic, we should be using a CDN. If you would like to learn more about system design,

What is RPC.

GRPC is an open source remote procedure called framework created by Google in 2016. It was a rewrite of their internal rpc infrastructure that they used for years.

What is an RPC or a Remote Procedure Call?

A local procedure call is a function call within a process to execute some code a remote procedure called enables one machine to invoke some code on another machine as if it is local function call from a user’s perspective.

A GRPC is a popular implementation of RPC many organisations have adopted GRPC as a preferred RPC mechanism to connect a large number of microservices running within and across data centres.

GRPC has a thriving developer ecosystem it makes it very easy to develop production quality and type saving apis that scale well the core of this ecosystem is to use a protocol buffers as its data interchange format.

Protocol buffers is a language agnostic that platform agnostic mechanism for encoding structured data gfc uses protocol buffers to encode and send data over the wires by default while GRPC could support other encoding formats like Json protocol buffers provide several advantages that makes it the encoding format of choice for GRPC protocol buffer support strongly typed schema definition the structure of the data over the wire is defined in a prototype protocol buffers provide broad tooling support to turn their schema defying the portal file into data access classes for all popular programming languages a gopc service is also defined in a profile by specifying all pc method parameters and return types the same tooling is used to generate grpc client and server code from the profile developers use these generator classes in the client to make rpc calls and in the server to fulfil the rpc request by supporting many programming languages the client and server can independent No he choose the programming language and ecosystem best suited for their own particular use cases this is traditionally not the case for most other C frameworks the second reason why GRPC is so popular is because it is high performance out of the box 2 factors contributes to his performance first is the protocol buffer is very efficient binary encoding format it is much faster than Jason 2nd GMPC is built on top of HGTP 2 to provide a high performance foundation at scale the use of HTTP2 brings many benefits we discussed HTTP2 in an earlier video cheque out the link in the description for more information gfc uses http 2 streams it allows multiple streams of messages over a single long leaf tcp connexion this allows the GRPC framework to handle many concurrent RPC calls over a small number of tcp connexions between clients and servers to understand how.